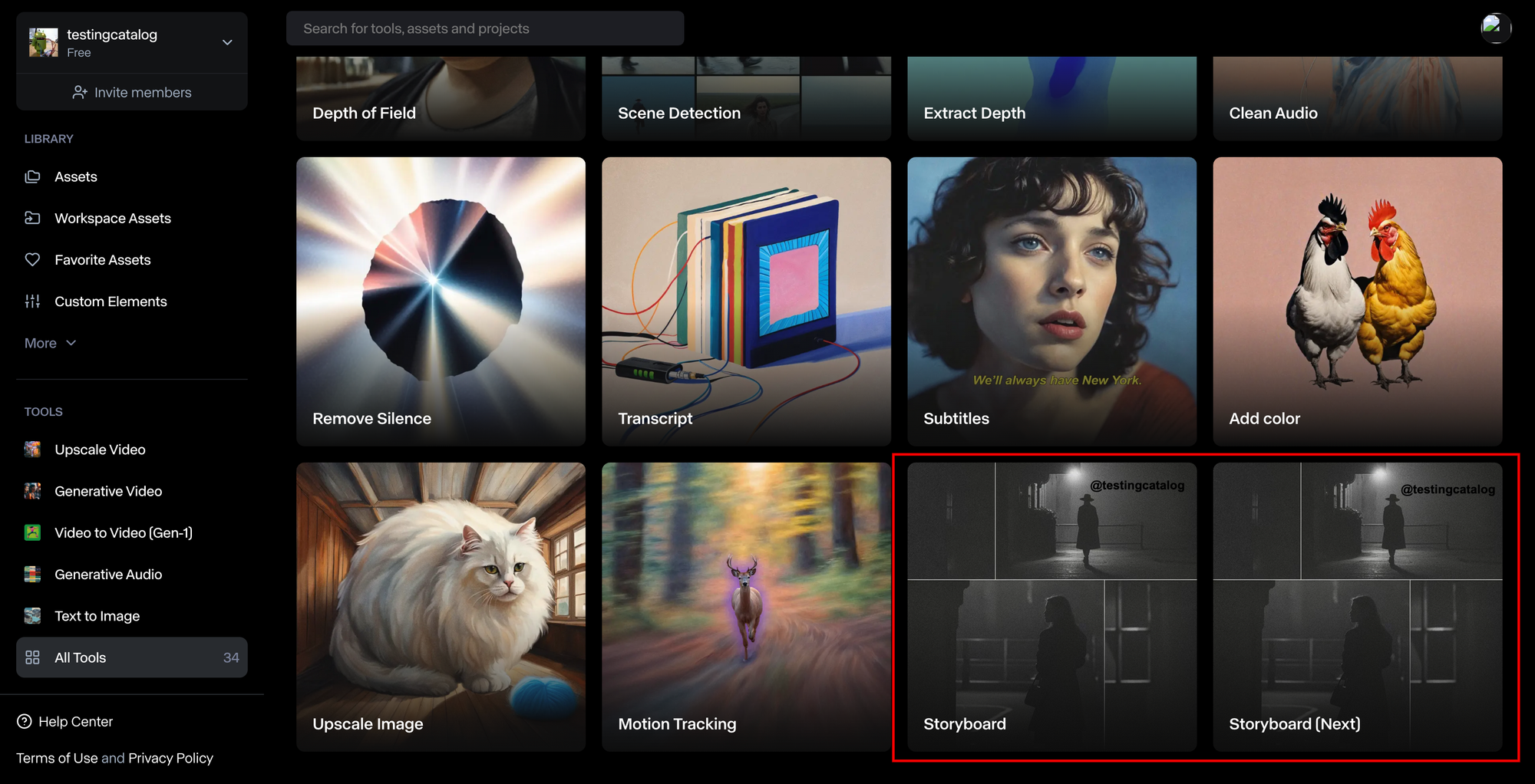

Runway is working on two different storyboard prototypes, each with its own feature set and user interface. Here's a breakdown of both prototypes and what they might mean:

Storyboard Prototypes

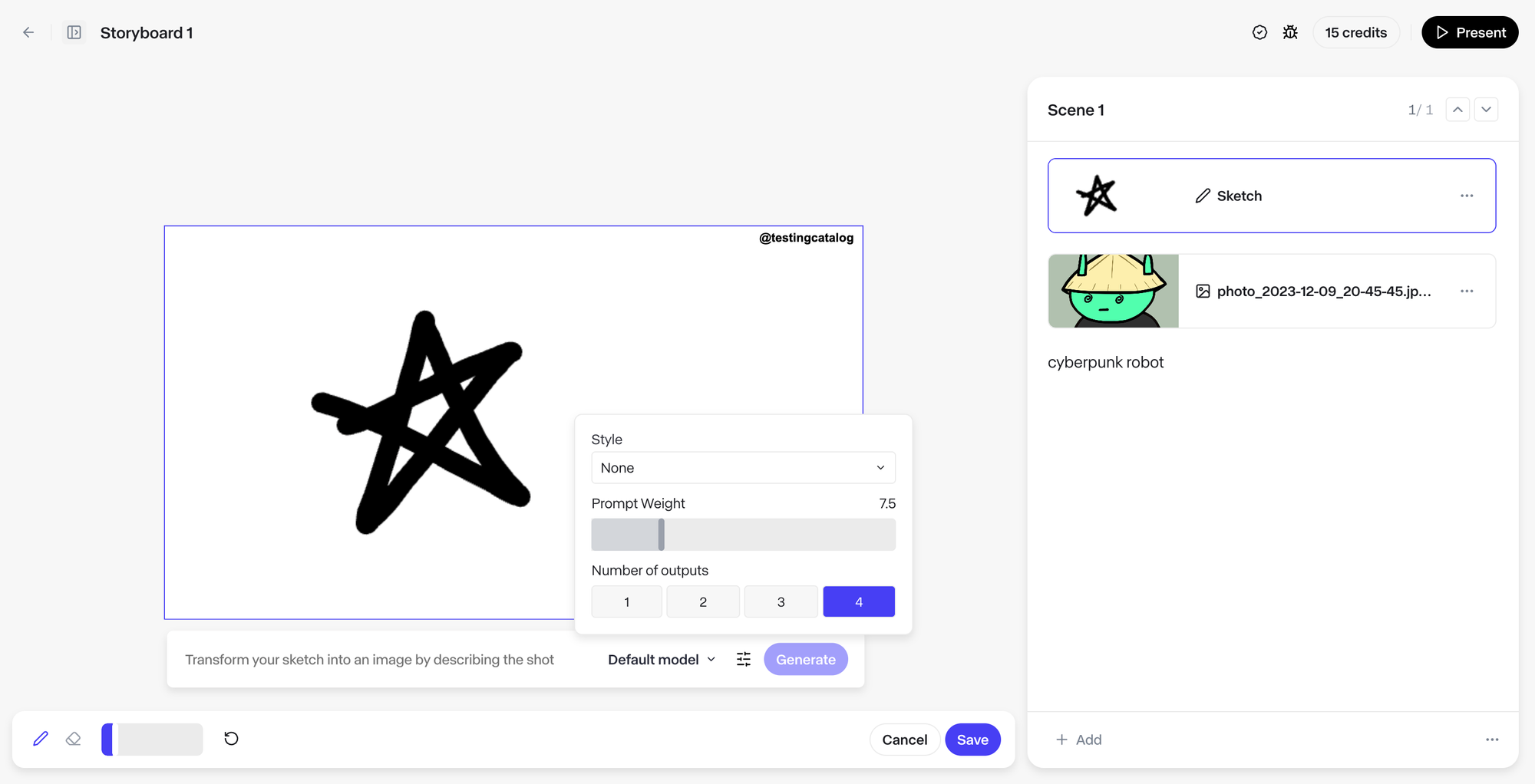

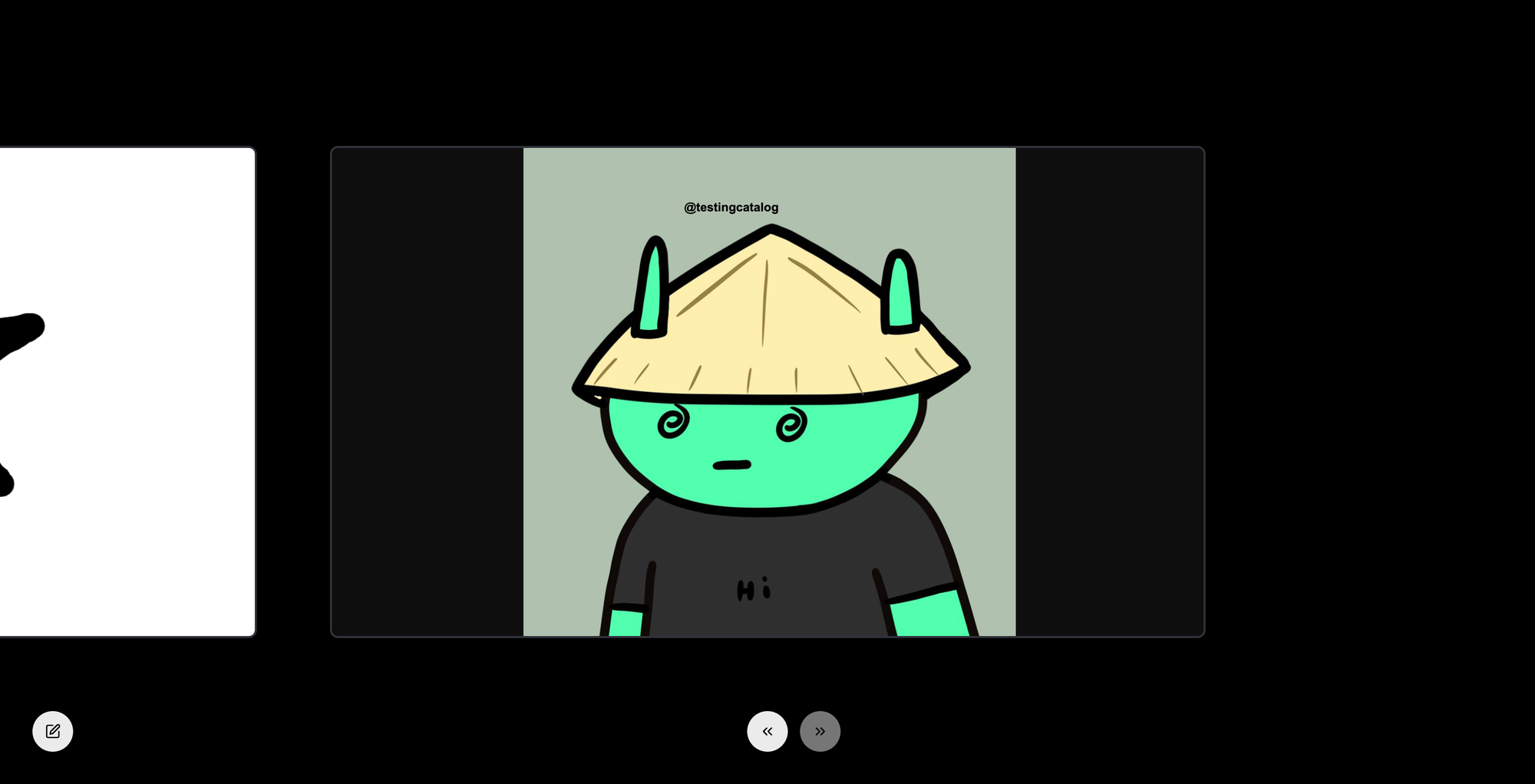

Storyboard 1: This version allows users to draw and transform their drawings into images. These images can then be animated using the Gen-2 model. The results can be previewed in a gallery, enabling users to view each generation individually.

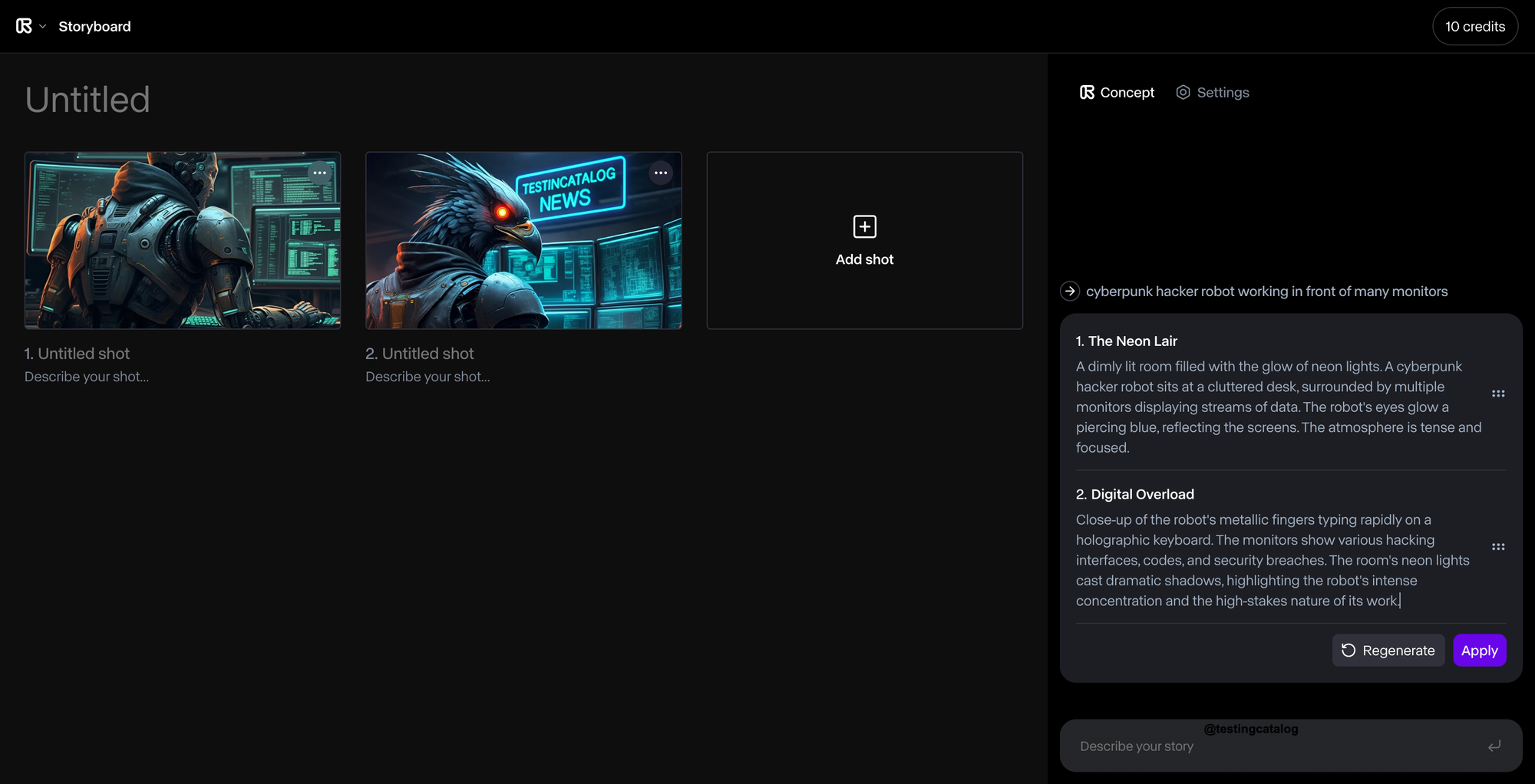

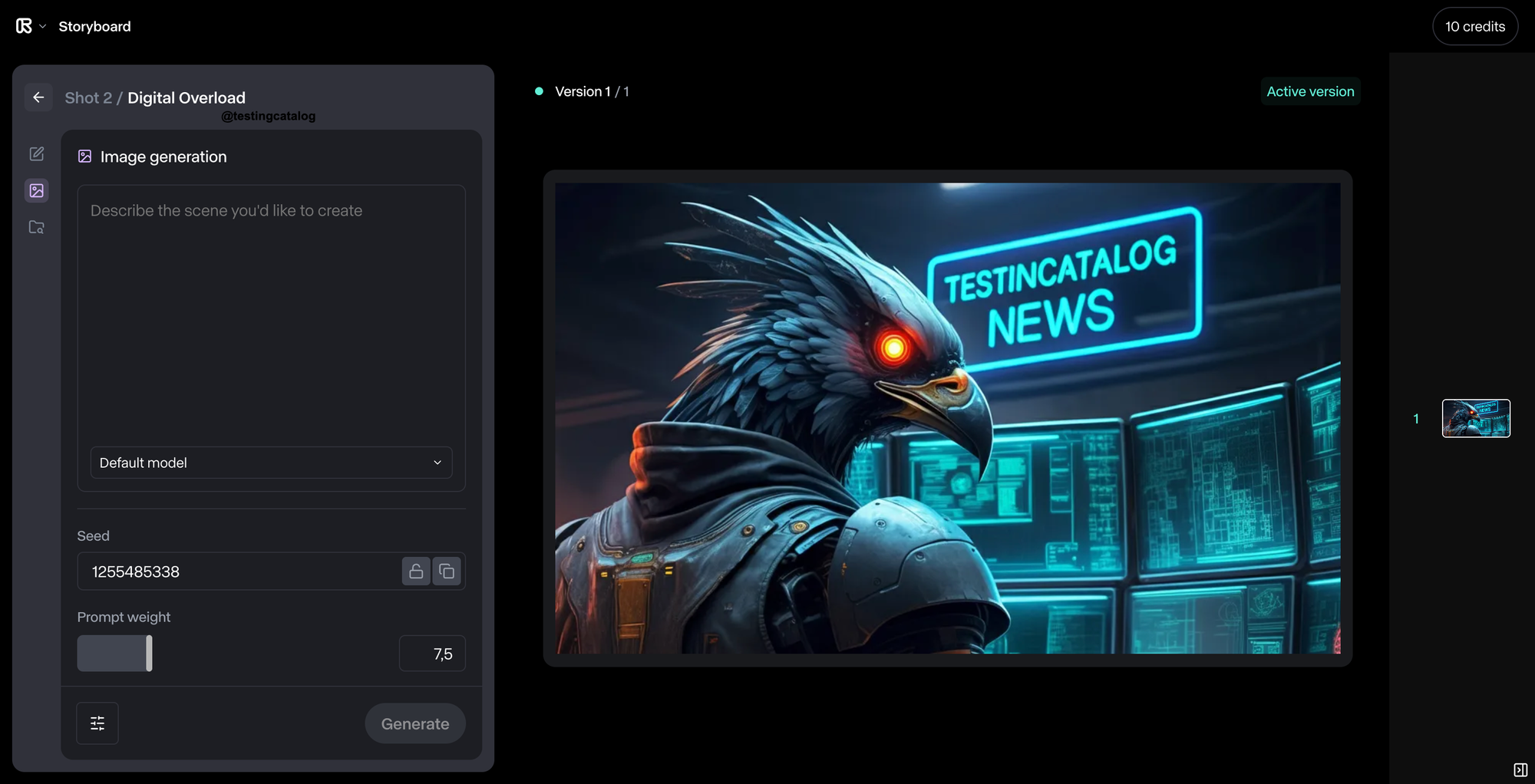

Storyboard 2: This prototype features a different UI where users can generate text prompts for uploaded or generated images. These images can be regenerated afterwards, but there is no preview mode available in this version.

Key Features and Differences

- Drawing and Animation: The first storyboard emphasizes drawing and animation capabilities, leveraging the Gen-2 model for video generation.

- Text Prompt Generation: The second storyboard focuses on generating text prompts for images, allowing for regeneration but lacking a preview mode.

- Prototype Nature: Both concepts appear to be early prototypes, and neither is necessarily designed to produce a single finished video at this stage. This suggests that Runway is still experimenting and refining these tools ahead of a future release.

Comparison with VideoFX Storyboard

- VideoFX Storyboard: Google's VideoFX tool also has a storyboard feature, which lets users create multiple images and then generate a video from them that tells a story. The feature relies on the Imagen 3 model for image generation and also supports audio generation.

- Runway vs. VideoFX: While both Runway and VideoFX offer storyboard functionalities, the approaches differ. Runway's prototypes focus on drawing and text prompt generation, whereas VideoFX integrates image and audio generation into its storyboard feature.