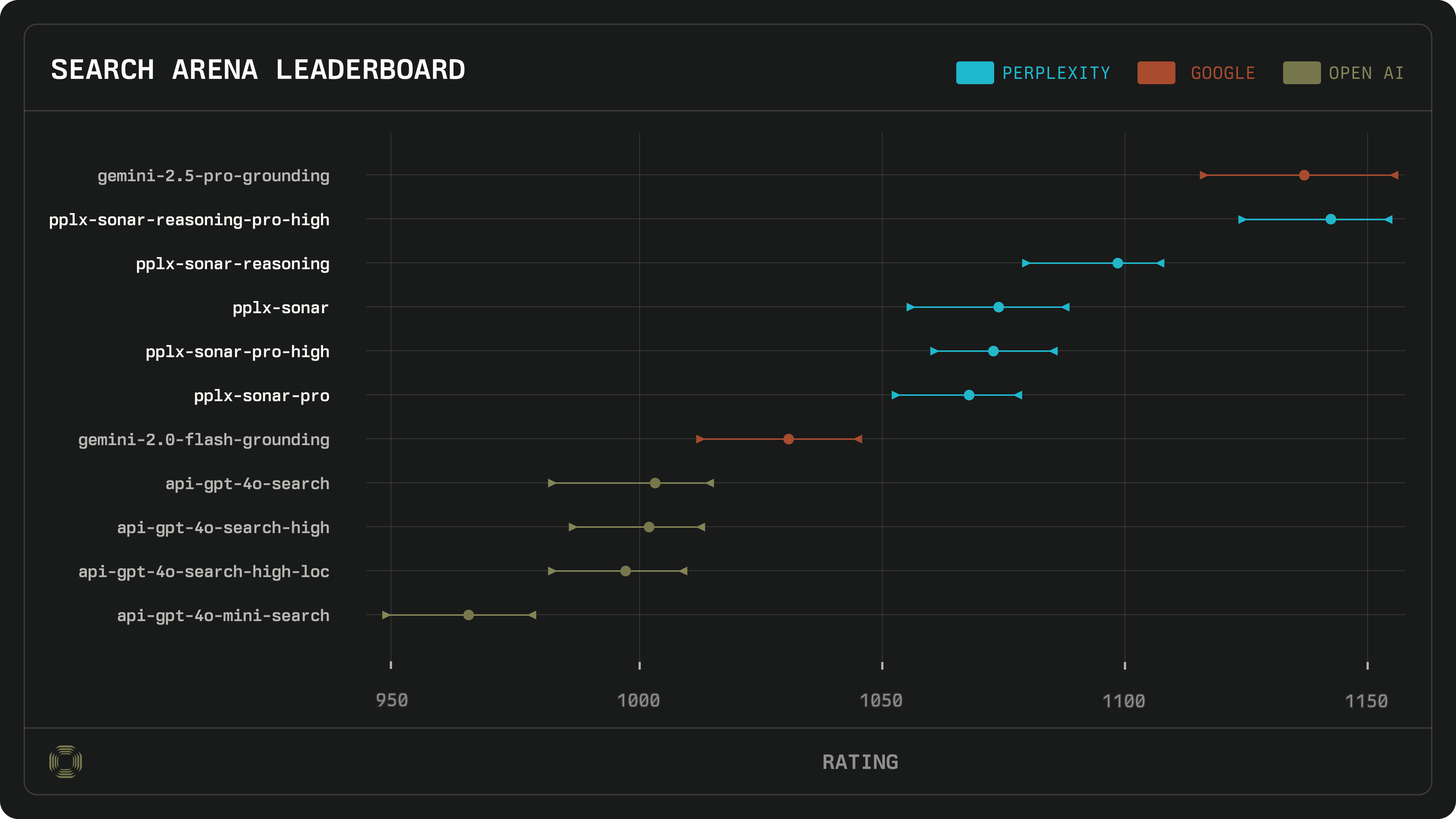

Perplexity has announced that its Sonar-Reasoning-Pro-High model tied for first place with Google’s Gemini-2.5-Pro-Grounding in the latest LM Arena Search Arena evaluation. The evaluation, conducted between March 18 and April 13, 2025, compared 11 leading search-augmented large language models using over 10,000 human preference votes on real-world queries spanning coding, writing, research, and recommendations. Sonar-Reasoning-Pro-High matched Gemini-2.5-Pro-Grounding in overall score and was preferred over it in 53% of their direct head-to-head comparisons. Perplexity’s Sonar models secured the top four leaderboard positions, surpassing the latest web search models from both Google and OpenAI.

The Sonar family of models is optimized for deep online search and reporting, with a focus on longer, more comprehensive responses that cite two to three times as many sources as comparable models. According to the evaluation’s findings, this depth of search and heavier citation were key factors in user preference. Sonar models are available to Perplexity Pro users and through the Sonar API, which offers flexible pricing and search modes for businesses and developers. The API lets developers integrate generative AI search into third-party applications, with a basic tier for cost-effective solutions and a Pro tier for more advanced, detailed queries.
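To illustrate what integrating the Sonar API into an application might look like, here is a minimal Python sketch using only the standard library. It is not taken from the announcement: it assumes the API exposes a chat-completions style endpoint at `https://api.perplexity.ai/chat/completions` with bearer-token authentication, and that `sonar` and `sonar-pro` are the model identifiers for the basic and Pro tiers. The helper names `build_sonar_request` and `ask_sonar` are hypothetical. Check the current Sonar API documentation before relying on any of these details.

```python
import json
import urllib.request

# Assumed endpoint; verify against the current Sonar API documentation.
API_URL = "https://api.perplexity.ai/chat/completions"


def build_sonar_request(question, api_key, model="sonar"):
    """Build (but do not send) an HTTP request for a single search question.

    `model` is assumed to be "sonar" for the basic tier or "sonar-pro"
    for the Pro tier; these identifiers are assumptions, not quoted
    from the announcement.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": question}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask_sonar(question, api_key, model="sonar"):
    """Send the request and return the decoded JSON response as a dict."""
    request = build_sonar_request(question, api_key, model)
    with urllib.request.urlopen(request, timeout=60) as response:
        return json.load(response)
```

An application would call `ask_sonar("...", api_key)` with its own key and switch `model` to the Pro tier only for queries that need more detailed answers, which keeps the cheaper tier as the default.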

Perplexity, the company behind Sonar, has positioned itself as a leader in AI-powered search by prioritizing accuracy, transparency, and comprehensive source attribution. The company continues to expand its offerings and is hosting an API overview session with co-founder and CTO Denis Yarats on April 24, 2025, to discuss benchmarks and use cases. The Search Arena result underscores Perplexity’s commitment to delivering high-quality, reliable AI search tools for both end users and enterprise clients.