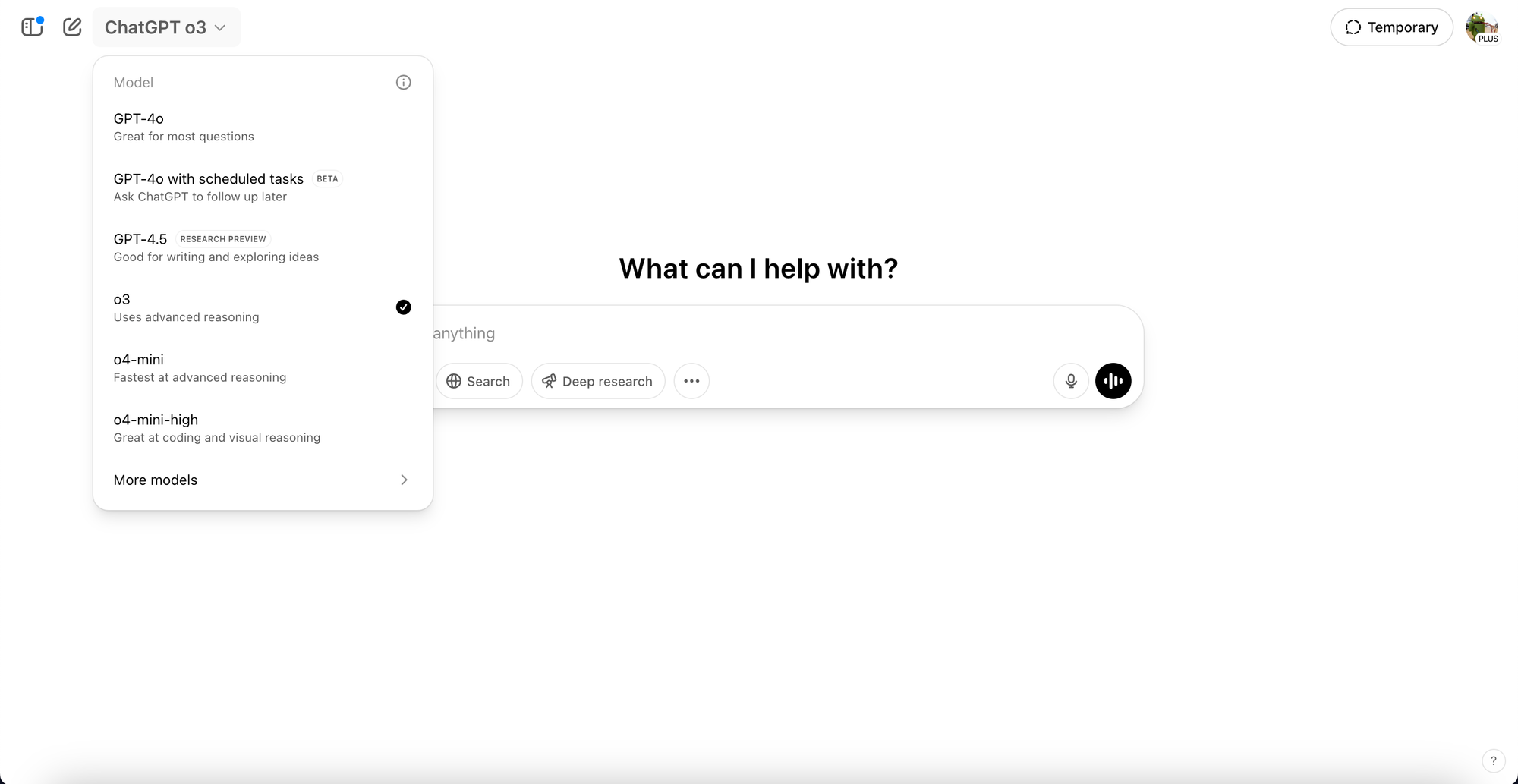

OpenAI released o3 and o4‑mini on 16 April 2025. Aimed at ChatGPT users and developers, the models decide for themselves when to invoke web search, Python, file analysis, or image generation, typically finishing multi‑step tasks in under a minute. They also “think with images,” accepting sketches or screenshots and cropping, zooming, or rotating them as part of their reasoning. The o3 model sets new highs on Codeforces and SWE‑bench and makes 20% fewer major errors than o1 on difficult real‑world tasks, while the leaner o4‑mini scores 99.5% pass@1 on the 2025 AIME when given a Python tool and offers higher rate limits. ChatGPT Plus, Pro, and Team subscribers get both models at launch; Enterprise and Edu tiers follow a week later, and free users can sample o4‑mini through a new “Think” mode. API access is live for verified developers.
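For readers unfamiliar with the pass@1 metric cited above, the sketch below shows how such a score is commonly computed. It uses the standard unbiased pass@k estimator from code-generation benchmarks; the source does not say how OpenAI computed its AIME figure, so the function names and the sample counts here are illustrative assumptions only.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate pass@k for one problem, given n sampled answers of which c are correct.

    This is the commonly used unbiased estimator 1 - C(n-c, k) / C(n, k).
    For k = 1 it reduces to the plain fraction of correct samples, c / n.
    """
    if not 0 <= c <= n:
        raise ValueError("c must satisfy 0 <= c <= n")
    if not 1 <= k <= n:
        raise ValueError("k must satisfy 1 <= k <= n")
    if n - c < k:
        # Fewer than k incorrect samples: every draw of k contains a correct one.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results: list[tuple[int, int]], k: int = 1) -> float:
    """Average pass@k over problems; each entry is (n_samples, n_correct)."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Hypothetical per-problem tallies (not real benchmark data).
example = [(4, 4), (4, 2)]
score = mean_pass_at_k(example, k=1)
```

A benchmark-level pass@1 is then simply the average single-attempt success rate across all problems, which is why a score near 100% means almost every first attempt was correct.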

Training used an order of magnitude more reinforcement‑learning compute than earlier o‑series models, along with refreshed safety training data that adds refusal prompts covering biorisk and malware. OpenAI’s safety monitor flagged about 99% of biorisk conversations in a human red‑teaming campaign, and the firm says both models stay below the Preparedness Framework’s “High” risk threshold. Analysts at TechCrunch and VentureBeat argue the release shows reasoning agents merging with chat platforms, while Business Insider and Gary Marcus warn that benchmarks can mislead and that o3 falls short of AGI.

Founded in 2015, OpenAI introduced the o‑series in September 2024. By positioning o3 and o4‑mini as faster, cheaper successors to o1 and o3‑mini, and by teasing an o3‑pro rollout, the company is blending its reasoning line with the wider GPT roadmap ahead of GPT‑5.