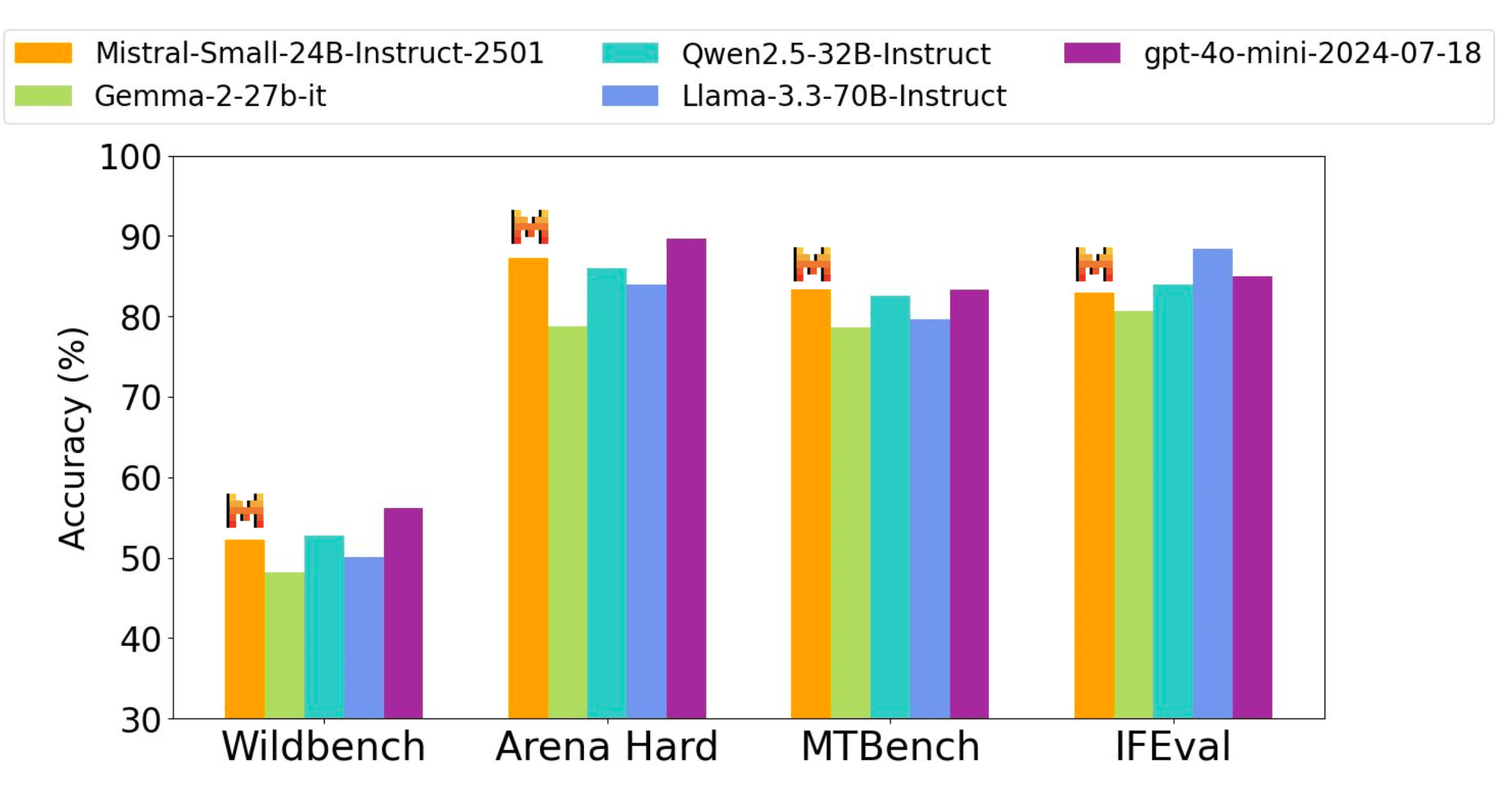

Mistral AI has officially released Mistral Small 3, a 24-billion-parameter open-source language model designed for high efficiency and low latency. Announced on January 30, 2025, the model is available under the Apache 2.0 license, which lets developers freely modify, deploy, and integrate it into their own applications. The release positions Mistral Small 3 as a competitive alternative to larger models such as Meta’s Llama 3.3 70B and Alibaba’s Qwen 32B. Mistral reports that it performs on par with Llama 3.3 70B while running more than three times faster on the same hardware.

Mistral Small 3

Mistral Small 3 targets what Mistral describes as the 80% of generative AI tasks that require robust language understanding and instruction following with minimal latency. Key technical highlights include:

- Size and Performance: With 24 billion parameters, it achieves an accuracy of over 81% on the MMLU benchmark and processes up to 150 tokens per second.

- Efficiency: The model uses far fewer layers than competing models, which cuts the time per forward pass and improves inference speed. Once quantized, it is suitable for local deployment on hardware such as a single Nvidia RTX 4090 or a MacBook with 32GB of RAM.

- Licensing: Distributed under Apache 2.0, it is fully open-source and commercially viable.

- Training Approach: Unlike some competitors, Mistral Small 3 was trained without reinforcement learning (RL) or synthetic data. This places it earlier in the model production pipeline and makes it a strong base for fine-tuning.
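The size and speed figures above can be put in perspective with some rough arithmetic. The Python sketch below makes three assumptions. First, it counts weight memory only and ignores the KV cache, activations, and runtime overhead. Second, the 8-bit and 4-bit levels are illustrative quantization choices. Third, the 500-token reply length is hypothetical. The helper names are illustrative and not part of any Mistral tooling.

```python
# Rough, weight-only memory and latency estimates for a 24B-parameter model.
# Assumption: KV cache, activations, and runtime overhead are ignored,
# so real memory usage will be somewhat higher than these figures.

PARAMS = 24e9  # parameter count stated for Mistral Small 3

# Bytes per parameter at common precisions (8-bit and 4-bit are
# illustrative quantization levels, not an official recommendation).
BYTES_PER_PARAM = {
    "bf16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(params: float, bytes_per_param: float) -> float:
    """Return the storage needed for the weights in GB (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


def generation_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Return the time to generate num_tokens at a steady decode rate."""
    return num_tokens / tokens_per_second


if __name__ == "__main__":
    for precision, nbytes in BYTES_PER_PARAM.items():
        print(f"{precision}: ~{weight_memory_gb(PARAMS, nbytes):.0f} GB of weights")
    # 150 tokens/s is the upper figure quoted above; 500 tokens is a hypothetical reply length.
    print(f"500 tokens at 150 tok/s: ~{generation_seconds(500, 150):.1f} s")
```

At 16-bit precision, the weights alone need about 48 GB. That exceeds the 24 GB of VRAM on an RTX 4090. A 4-bit quantization brings the weights down to about 12 GB, which is why quantization matters for single-GPU or 32GB-Mac deployments. At 150 tokens per second, a 500-token reply would take roughly 3.3 seconds.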

Use Cases

Mistral Small 3 is optimized for:

- Conversational AI: Virtual assistants requiring fast response times.

- Low-Latency Automation: Function execution in workflows or robotics.

- Domain-Specific Expertise: Fine-tuning for specialized fields like healthcare diagnostics or legal advice.

- Local Inference: Secure deployments for organizations handling sensitive data.

Industries already exploring its potential include financial services (fraud detection), healthcare (triage systems), and manufacturing (on-device command and control).

Availability

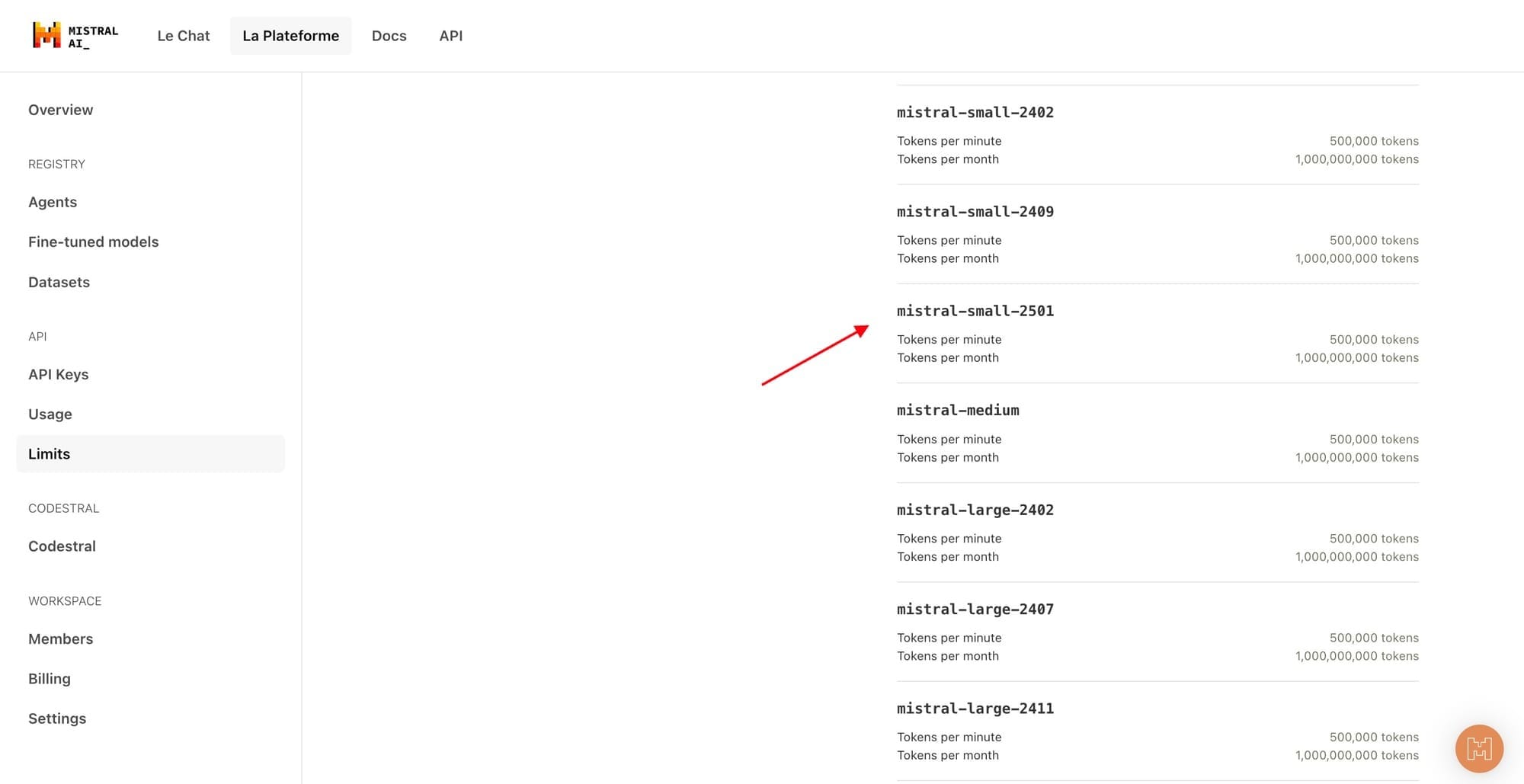

The model is accessible through platforms such as Hugging Face, Ollama, Kaggle, Together AI, and Fireworks AI. It will soon be integrated with NVIDIA NIM, Amazon SageMaker, Databricks, and other enterprise platforms.

About Mistral AI

Founded in France by former researchers from Google DeepMind and Meta AI, Mistral AI has emerged as a key player in open-source AI development. The company has previously introduced models like Mistral 7B and is committed to advancing open-source innovation with tools that balance performance and accessibility.

Industry Impact

Mistral Small 3 represents a significant step toward democratizing AI by offering high-performing models that rival proprietary solutions like OpenAI’s GPT-4o mini. Its release underscores the growing trend of smaller yet highly capable models that prioritize speed, cost-efficiency, and local deployment options.