Meta Connect 2024 brought a series of announcements spanning AI, AR, and VR.

Llama 3.2 Models

Prior to the keynote, leaks revealed that Meta would launch the Llama 3.2 models in 1, 3, 11, and 90 billion parameter sizes. During the event, Meta referred to Llama as the “Linux of AI,” highlighting the models’ open-source nature and broad accessibility. Llama 3.2 is also multimodal: the 11B and 90B models accept images as well as text, while the 1B and 3B models are text-only.

However, due to EU regulations, only the text-based versions of Llama 3.2 will be available in Europe, continuing the trend in which most Meta AI products remain unavailable in the EU.

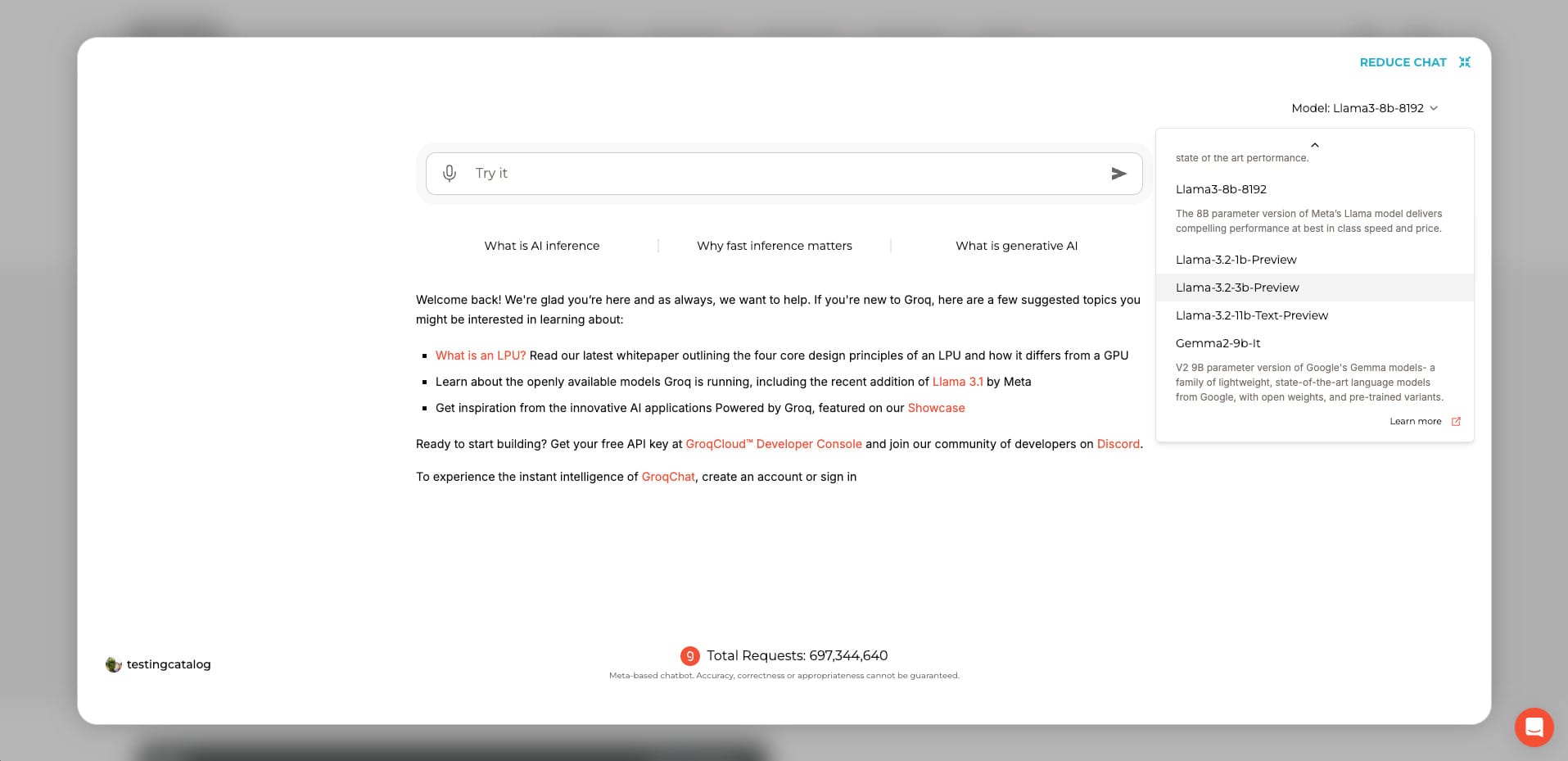

Llama 3.2 Model Release

The Llama 3.2 model was made available on Groq shortly after the keynote. Users could try it right away, though only the text-based version was released, without the multimodal capabilities.

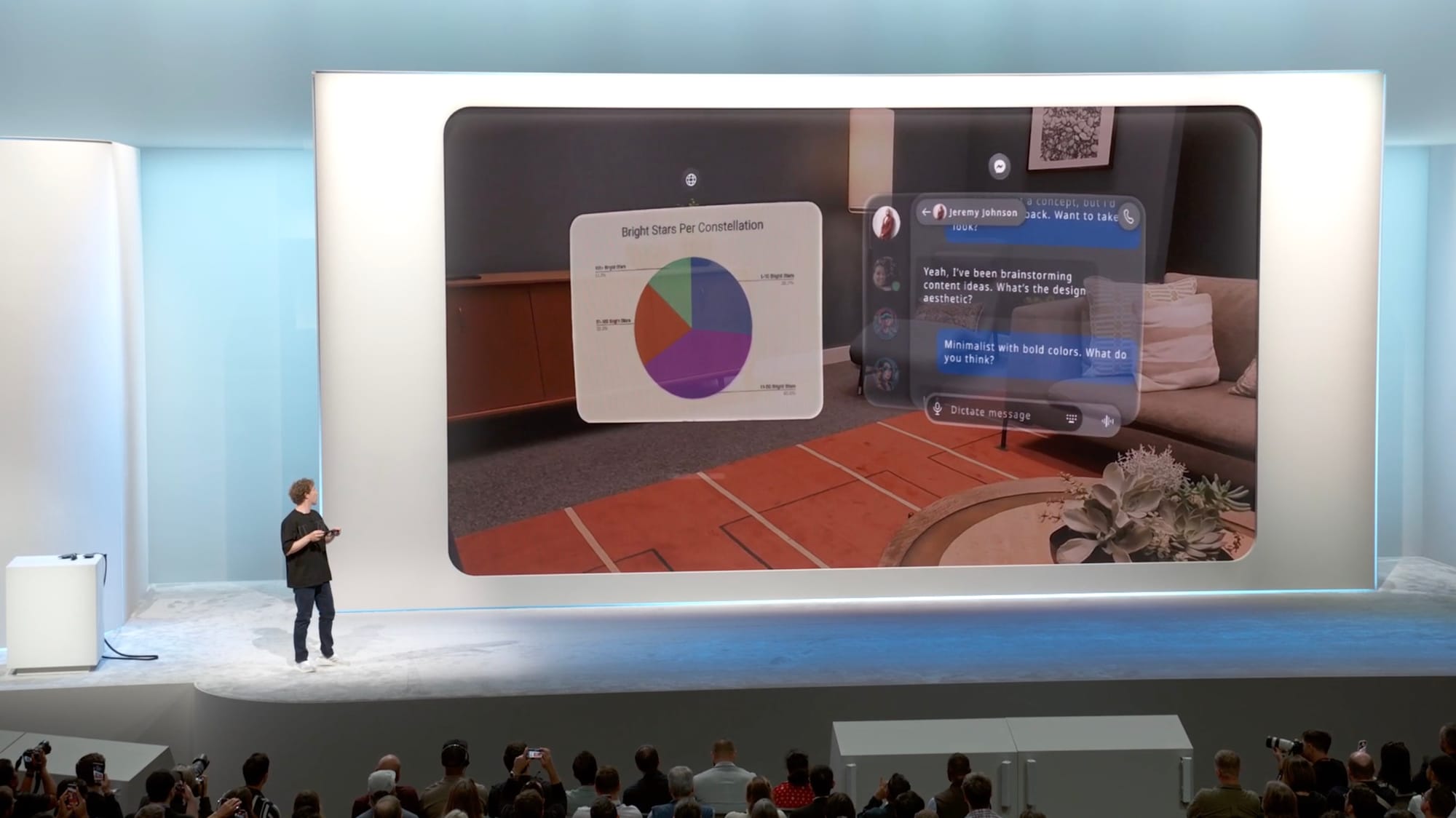

Meta Quest and New VR Features

The keynote kicked off with the introduction of Meta Quest and a range of new VR functionalities, then transitioned to the AI features coming to Meta’s core apps: Instagram, WhatsApp, and Messenger.

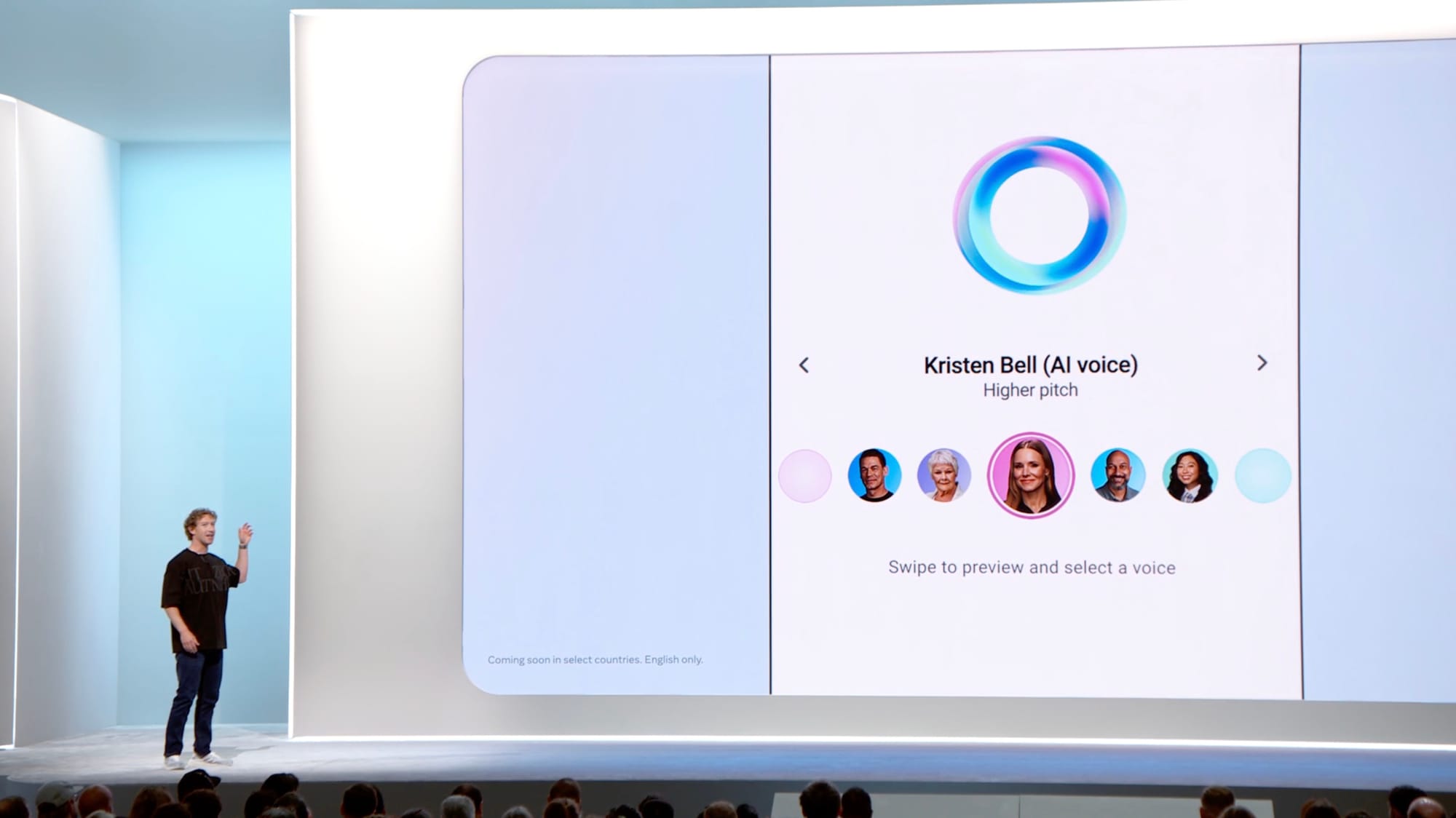

Voice Mode for Meta AI

One of the major announcements was the rollout of a voice mode for Meta AI across Instagram, WhatsApp, Messenger, and Facebook. The voice UI will be accessible via a bottom sheet and looks to be Meta’s response to OpenAI’s recently released Advanced Voice Mode. While Meta AI’s voice features aren’t as advanced as OpenAI’s, they will still compete with Gemini Live, another feature launched this month.

AI-Generated Video Avatars

Mark Zuckerberg later demonstrated AI-generated video avatars, holding a live conversation with one on stage. This was clearly Meta’s answer to similar video model innovations announced by OpenAI and Google. The twist is that Meta seems poised to offer these avatars as conversational characters, a direction similar to Character AI’s approach.

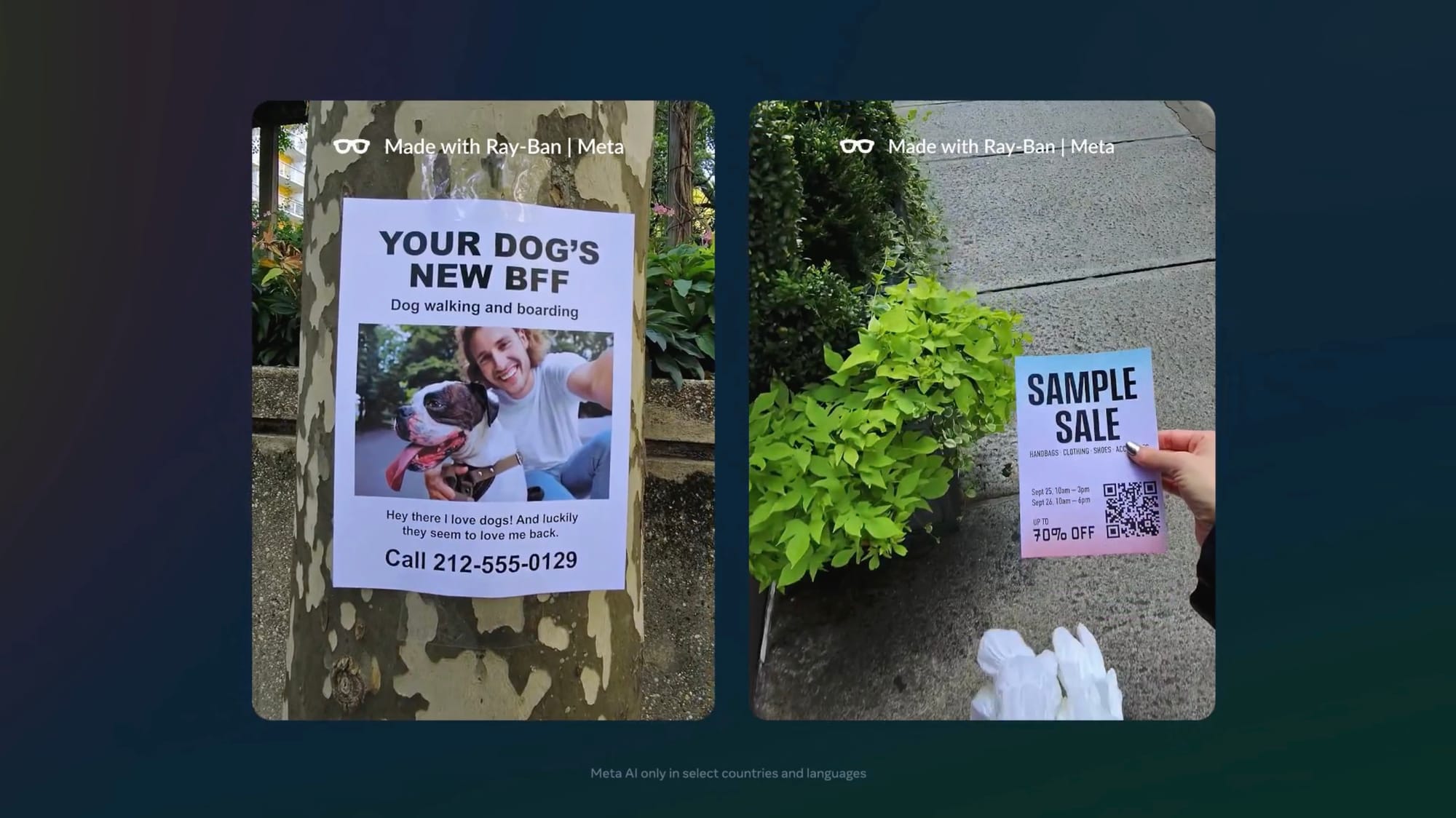

Reels Translation & Meta Glasses Integration

Next, Meta showcased live translations for Reels, alongside enhanced control integrations for Meta Glasses with Spotify, Amazon Music, Audible, and other apps. Meta also demonstrated the glasses’ ability to recognize images and process video as multimodal input. New models of Meta Glasses were introduced, including a limited edition with transparent frames that reveal the internal tech, such as wires and microchips.

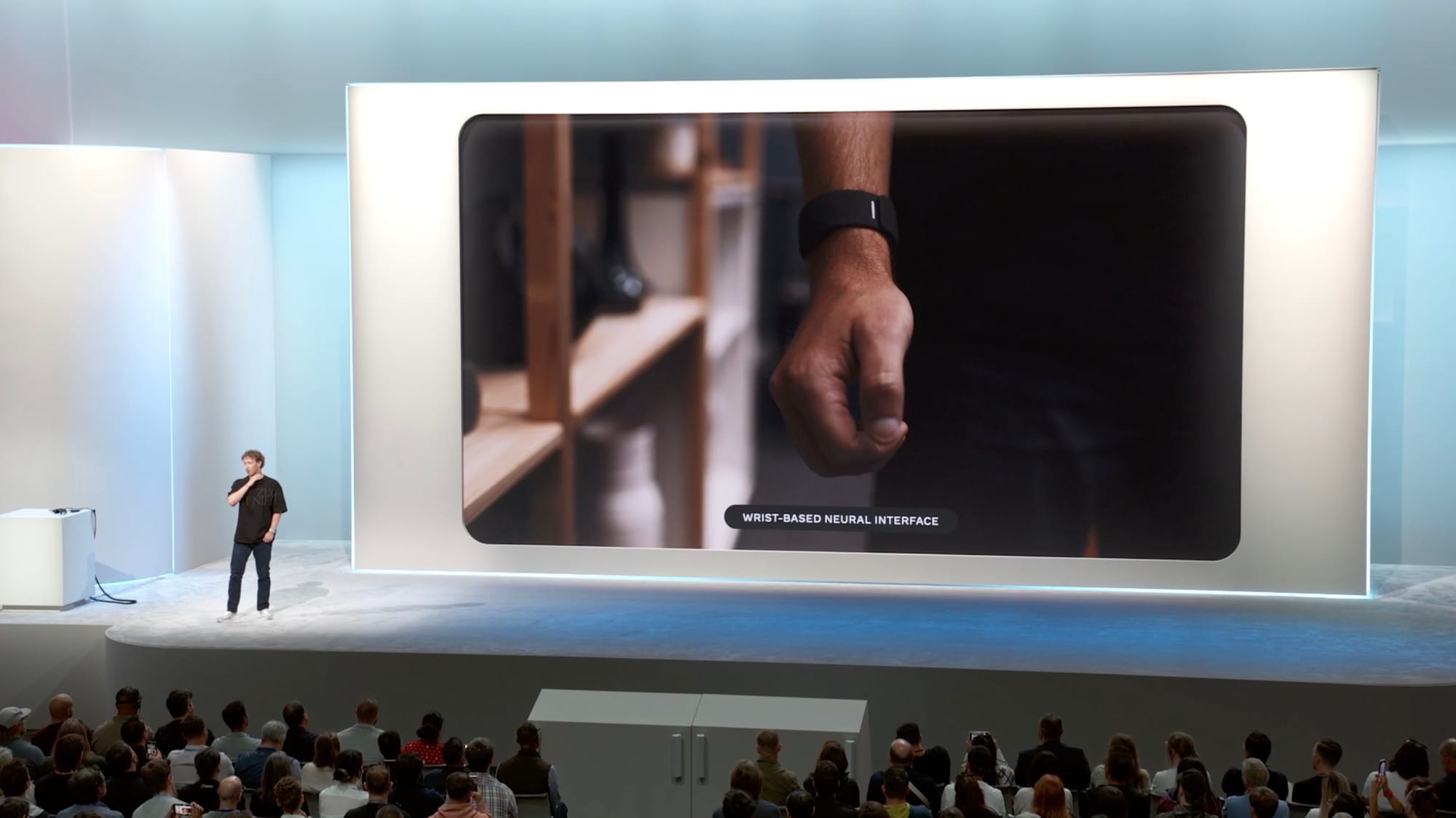

Holographic Glasses and Neural Wrist Interface

The most groundbreaking reveal was Meta’s new holographic glasses, designed to compete with Apple Vision Pro. These glasses provide a full AR experience, but with a significant advantage: they’re much smaller and look like normal glasses, without the need for wires. They also come with a neural wrist interface, a wristband that allows control through gestures in addition to voice commands.