LumaLabs has introduced camera control, a feature that lets users specify the camera movements in their generated videos. Other AI video tools, such as Runway, offer something similar: Runway's Gen-2 model already supports camera control, and the company is working on bringing it to Gen-3.

🎥✨ Camera Motion is here in Dream Machine 1.6! Effortlessly direct text-to-video and image-to-video scenes with simple commands! Just type in ‘camera’ to unlock camera motions! Imagine in #LumaDreamMachine 🎬🚀 https://t.co/G3HUEBEAcO pic.twitter.com/0kf1kVO5SA

— Luma AI (@LumaLabsAI) September 3, 2024

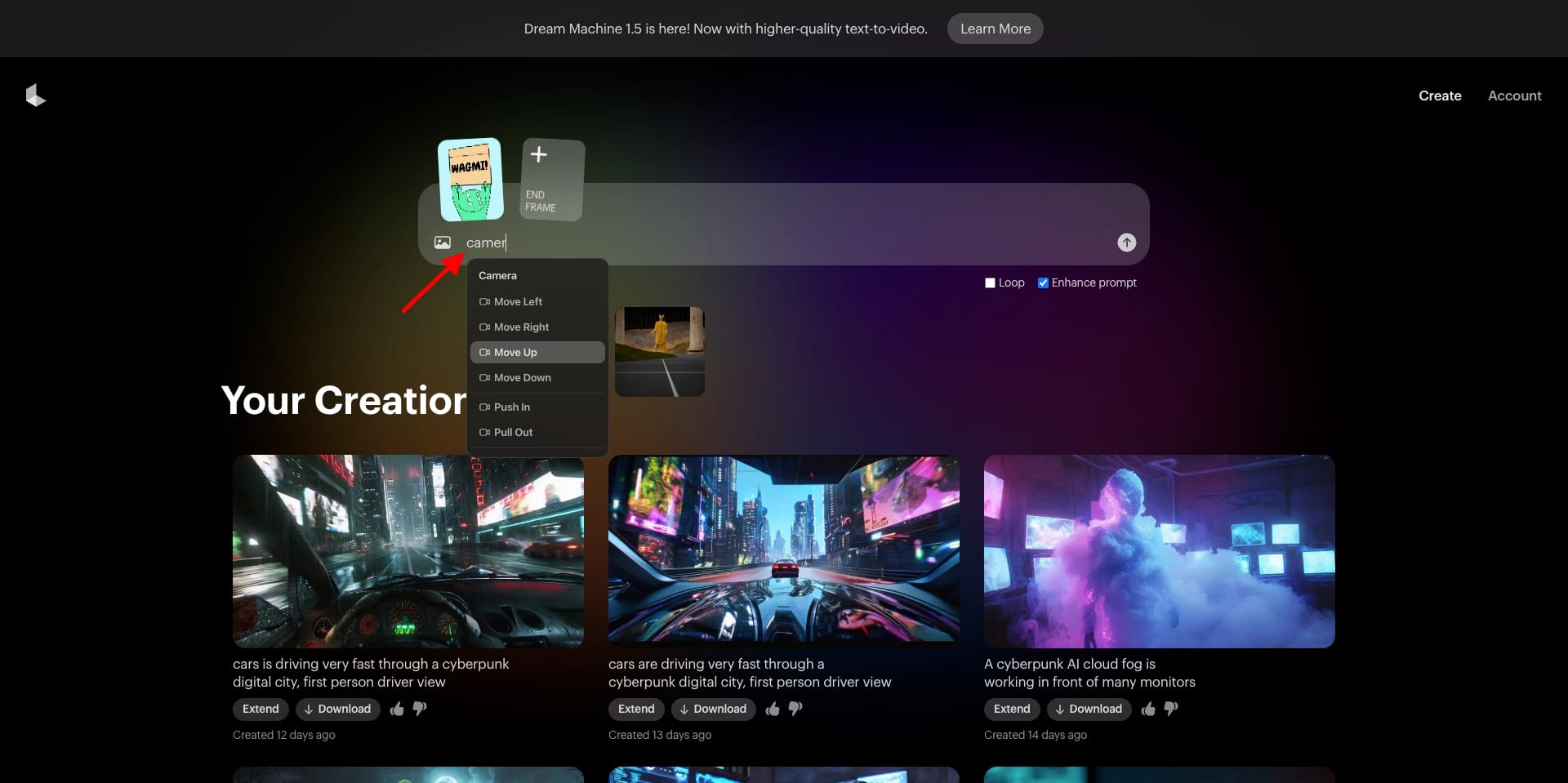

One key difference is that LumaLabs offers fewer tools overall than other platforms, which has made camera control a long-awaited addition for many creators. To access the controls, users type the keyword "camera" into the prompt field. This brings up a dropdown menu of camera movements to choose from. The selected movement is then inserted into the prompt as text, and users who prefer to can type the camera instruction into the prompt field directly.

Interestingly, LumaLabs seems to have implemented a system that automatically detects these key phrases in the prompt and applies the camera controls accordingly. This design keeps the user interface clean by avoiding an overload of options while maintaining powerful functionality within the prompt itself.
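To make the idea concrete, here is a minimal Python sketch of how prompt-based camera controls could work in principle. It is not LumaLabs' actual implementation, which is not public. The phrase list `CAMERA_MOTIONS` and the functions `suggest_motions` and `detect_camera_motions` are hypothetical names invented for this illustration. The first function mimics a dropdown that filters options as the user types, and the second scans a finished prompt for known camera phrases.

```python
import re

# Hypothetical phrase list for illustration only; this is an assumption,
# not Luma's actual vocabulary of camera motions.
CAMERA_MOTIONS = [
    "camera pan left",
    "camera pan right",
    "camera zoom in",
    "camera zoom out",
    "camera orbit left",
    "camera orbit right",
]


def suggest_motions(typed: str) -> list[str]:
    """Return the motions a dropdown might offer for the text typed so far."""
    needle = typed.strip().lower()
    if not needle:
        return []
    return [motion for motion in CAMERA_MOTIONS if motion.startswith(needle)]


def detect_camera_motions(prompt: str) -> list[str]:
    """Find known camera phrases in a prompt, in order of first appearance."""
    # Lowercase and collapse runs of whitespace so matching is forgiving.
    normalized = re.sub(r"\s+", " ", prompt.lower())
    hits = []
    for motion in CAMERA_MOTIONS:
        match = re.search(r"\b" + re.escape(motion) + r"\b", normalized)
        if match:
            hits.append((match.start(), motion))
    return [motion for _, motion in sorted(hits)]


if __name__ == "__main__":
    print(suggest_motions("camera z"))
    print(detect_camera_motions("A fox runs through snow, Camera Orbit Left"))
```

Under these assumptions, a prompt with no recognized phrase simply yields an empty list, so the word "camera" appearing in an ordinary scene description would not trigger any movement. A real system would likely be more tolerant of paraphrases than this exact-phrase match.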