Hume AI has recently announced the release of EVI 2, a foundational voice-to-voice model designed to provide remarkably human-like voice conversations. This model is capable of conversing rapidly and fluently with users, understanding and generating various tones of voice, and even responding to niche requests such as changing its speaking rate or rapping.

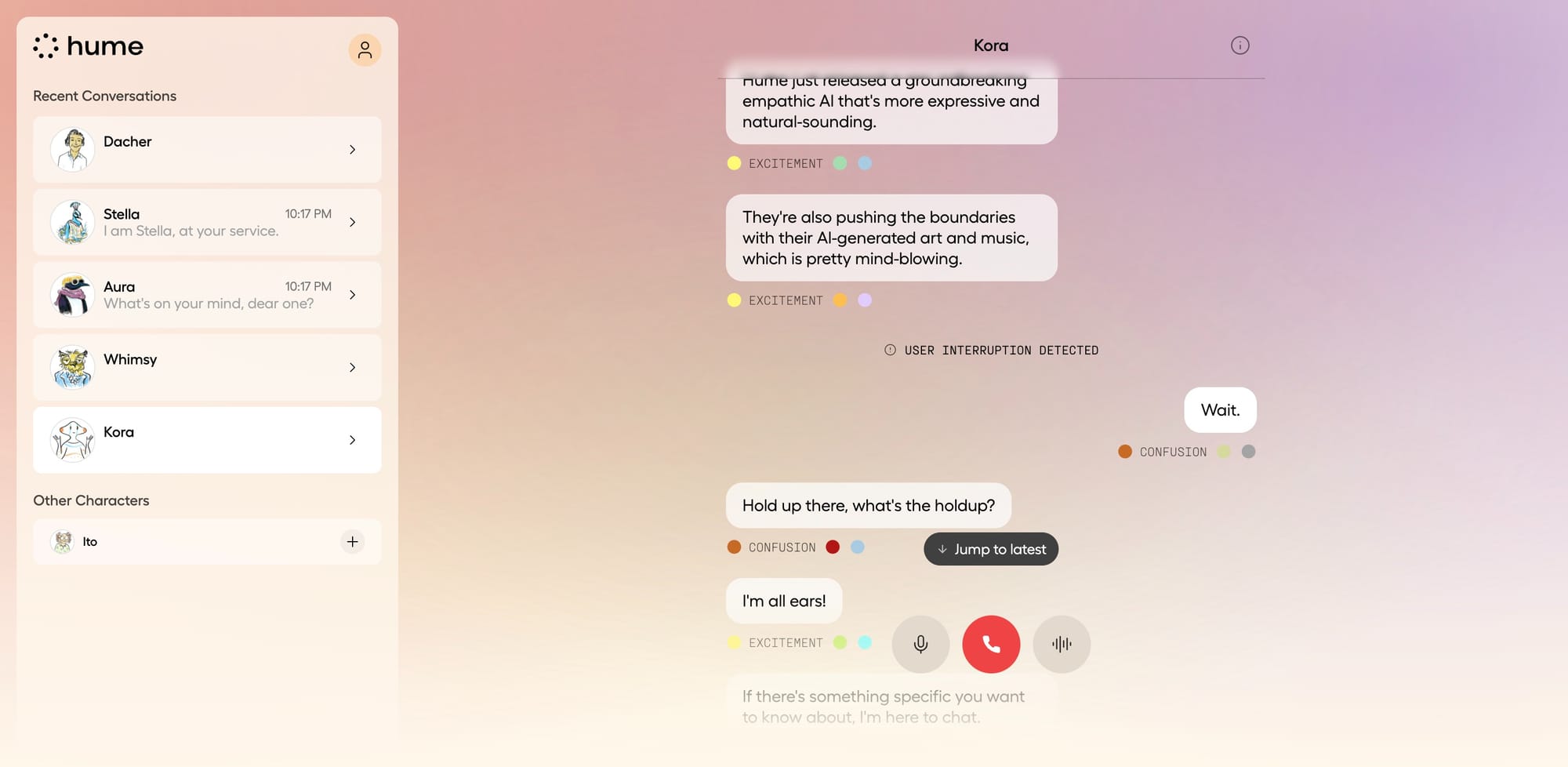

Key features of EVI 2 include its ability to emulate a wide range of personalities, accents, and speaking styles, as well as its emergent multilingual capabilities. It is trained for emotional intelligence, allowing it to anticipate and adapt to user preferences and maintain engaging characters and personalities.

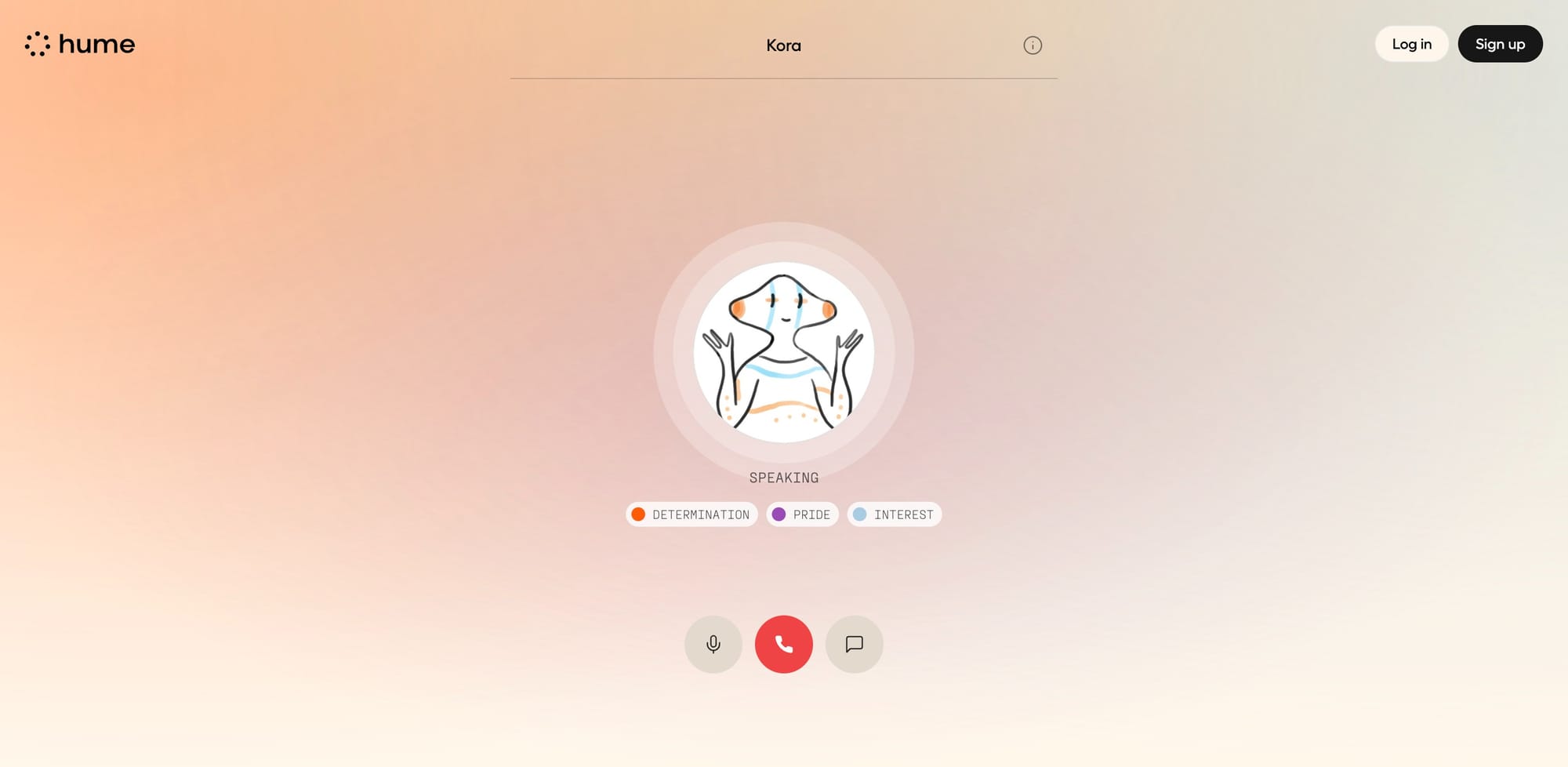

EVI 2 is available in beta for anyone to use, accessible via Hume AI's app and API. Importantly, by design it cannot clone voices unless its code is modified, which limits the unique risks associated with that capability. Instead, it offers an experimental voice modulation approach that lets users and developers create synthetic voices and personalities by adjusting base voices along scales such as gender, nasality, and pitch.
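To make the idea of adjusting a base voice along scales concrete, here is a minimal sketch in Python. It does not use Hume AI's API: the `VoiceSpec` class, the `adjusted` method, the `base-voice-a` name, and the -1.0 to 1.0 range are all hypothetical and chosen purely for illustration. Only the scale names (gender, nasality, pitch) come from the announcement.

```python
from dataclasses import dataclass, field

# Scale names are taken from the article; the numeric range is an assumption.
SCALES = ("gender", "nasality", "pitch")
SCALE_MIN, SCALE_MAX = -1.0, 1.0


@dataclass(frozen=True)
class VoiceSpec:
    """Hypothetical model of a synthetic voice: a base voice plus per-scale offsets."""
    base_voice: str
    adjustments: dict = field(default_factory=dict)

    def adjusted(self, **changes: float) -> "VoiceSpec":
        """Return a new spec with the given scales set, clamped to the allowed range."""
        merged = dict(self.adjustments)
        for name, value in changes.items():
            if name not in SCALES:
                raise ValueError(f"unknown scale: {name}")
            merged[name] = max(SCALE_MIN, min(SCALE_MAX, float(value)))
        return VoiceSpec(self.base_voice, merged)


# Start from a (hypothetical) base voice and nudge two scales.
voice = VoiceSpec("base-voice-a").adjusted(pitch=0.3, nasality=-2.0)
print(voice.adjustments)  # nasality is clamped to the lower bound
```

The point of the sketch is that every derived voice stays a bounded offset from a predefined base voice rather than a copy of an arbitrary speaker, which is consistent with the article's description of modulation as an alternative to cloning.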

The current release is EVI-2-small, with ongoing improvements planned for reliability, language coverage, and complex instruction following. A larger model, EVI-2-large, is also being fine-tuned and will be announced soon.

> Introducing Empathic Voice Interface 2 (EVI 2), our new voice-to-voice foundation model. EVI 2 merges language and voice into a single model trained specifically for emotional intelligence.
>
> You can try it and start building today. pic.twitter.com/yqM6ivmkUl
>
> Hume (@hume_ai), September 11, 2024

EVI 2 represents a significant step forward in Hume AI's mission to optimize AI for human well-being, focusing on making its voice and personality highly adaptable to maximize user happiness and satisfaction. The model demonstrates that AI optimized for well-being can have a pleasant and fun personality, aligning with user goals and preferences.

EVI 2 is among the first publicly available voice-to-voice AI models, alongside OpenAI's GPT-4o Advanced Voice Mode, which has so far been previewed to only a limited number of users.