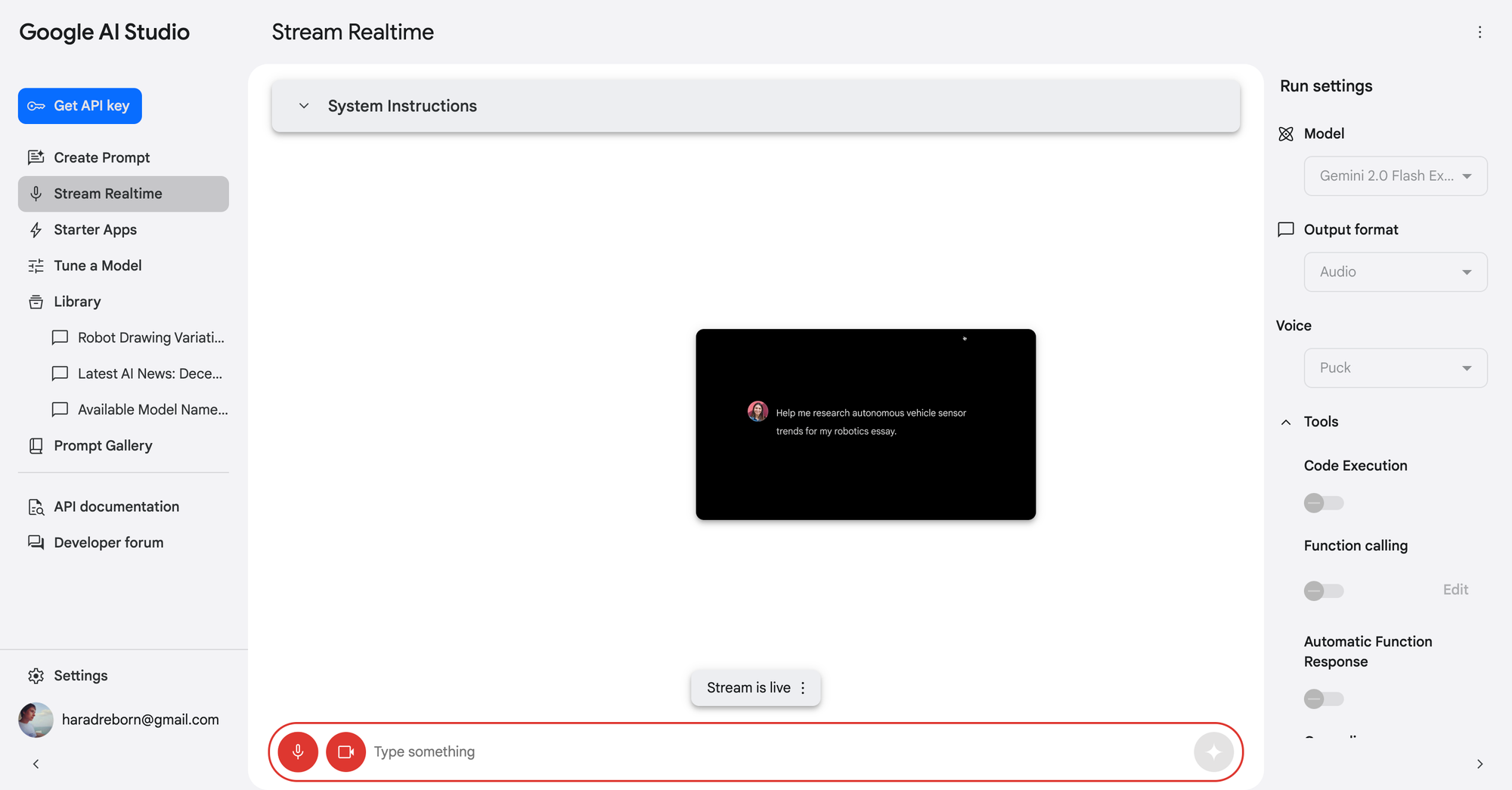

Google AI Studio received two significant updates today. The first, called Stream Real-Time, lets users interact with Gemini through multimodal inputs and outputs. It works like a voice mode with vision capabilities: users speak to Gemini and receive spoken responses. The audio functionality is similar to the previously available Gemini Live, but it is now powered by the new Gemini 2.0 Flash model and offers several output voices to choose from.

The standout feature, however, is vision. In this mode, users can share their screen or turn on their camera to give Gemini visual context. Gemini can then analyze the screen or camera feed, interpret what is happening, and offer relevant suggestions.

Many expected OpenAI to be the first to release a vision-enabled voice mode like this, but Google appears to have shipped one first. Although AI Studio remains primarily a developer tool, it is accessible on mobile, which makes it useful for consumers as well.

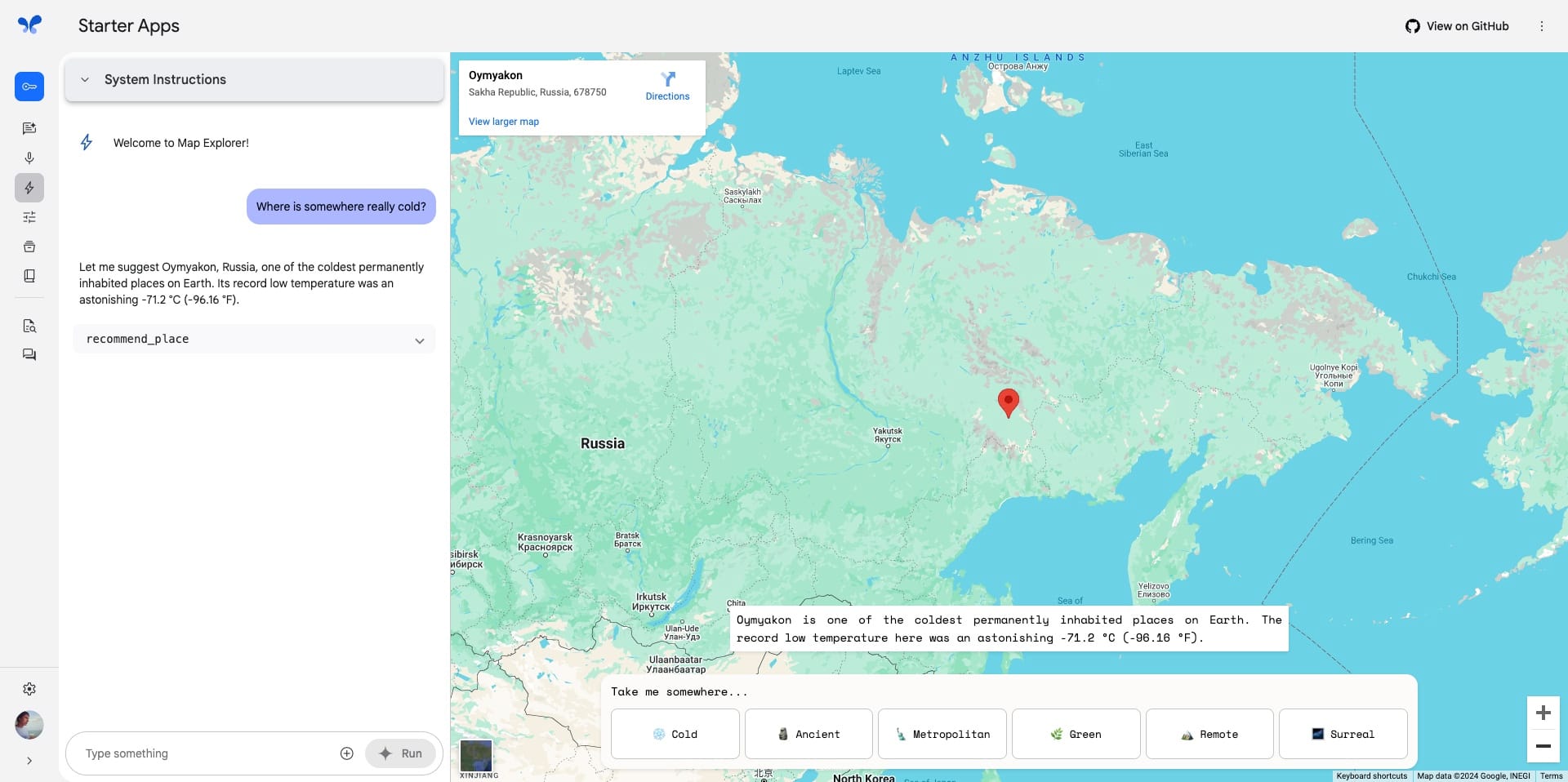

The second update introduced Starter Apps, a catalog of demo projects built with the Gemini API that is also available on GitHub. These apps can be run and explored directly within AI Studio. One notable example is Map Explorer, in which Gemini helps users navigate the map and offers suggestions. Other featured apps include Video Analyzer and Spatial Understanding, both of which users can try out for themselves.

These updates mark a significant leap in AI Studio’s capabilities, bridging the gap between developer tools and consumer-friendly applications.