Time is passing, and users are eagerly awaiting the release of the new voice mode, or at least an announcement of its timeline. Everyone hopes OpenAI will wrap up its preparations soon. On June 17, OpenAI shipped new builds of both the iOS app and the desktop app. While there are almost no visible changes, some significant under-the-hood updates hint at how the new voice mode might function.

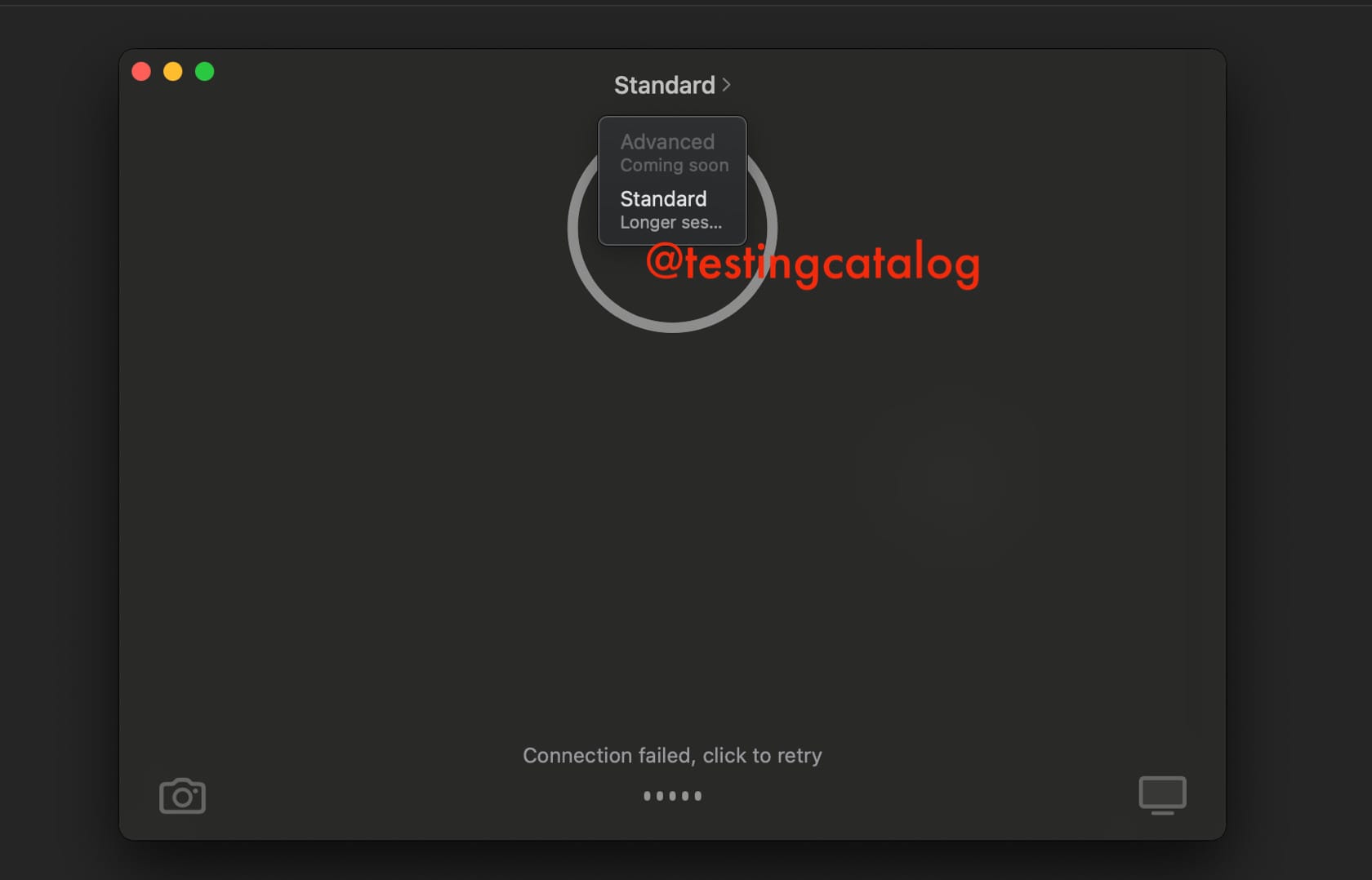

BREAKING: New details regarding the upcoming ChatGPT voice release: In the latest iOS build, users can select between the Standard and Advanced modes.

— TestingCatalog News 🗞 (@testingcatalog) June 17, 2024

Advanced mode is labelled as "Coming soon" and the Standard option is meant for "Longer sessions".

What does it mean?

1. This… pic.twitter.com/I6jFICQx5r

In the new voice UI, which is currently hidden from users, a small change introduces a drop-down menu where users can choose between Standard and Advanced modes. Standard mode is labeled as suitable for longer sessions, while Advanced mode is marked as "coming soon."

This likely means two things:

- The new UI might be released broadly to users before the new voice mode is available, with the voice mode rolling out gradually later.

- The rate limits for the new voice mode might differ from those of GPT-4o. Some users speculate that it will have a context window of only 2,000 tokens, or that it will require more powerful NVIDIA GPUs, but neither has been confirmed.

The macOS desktop app also received an update that includes the same toggle between Standard and Advanced modes.

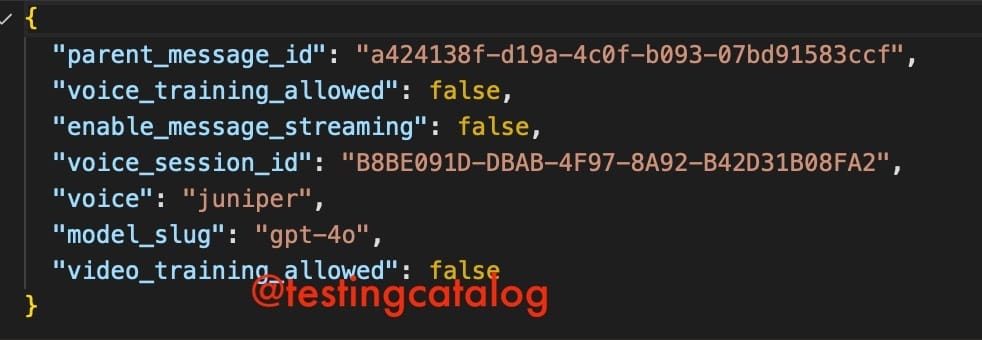

Judging by the API, a future update might add a toggle controlling whether video is used for training, similar to the existing option for voice. This toggle would be separate from the ones that disable training on text and voice.

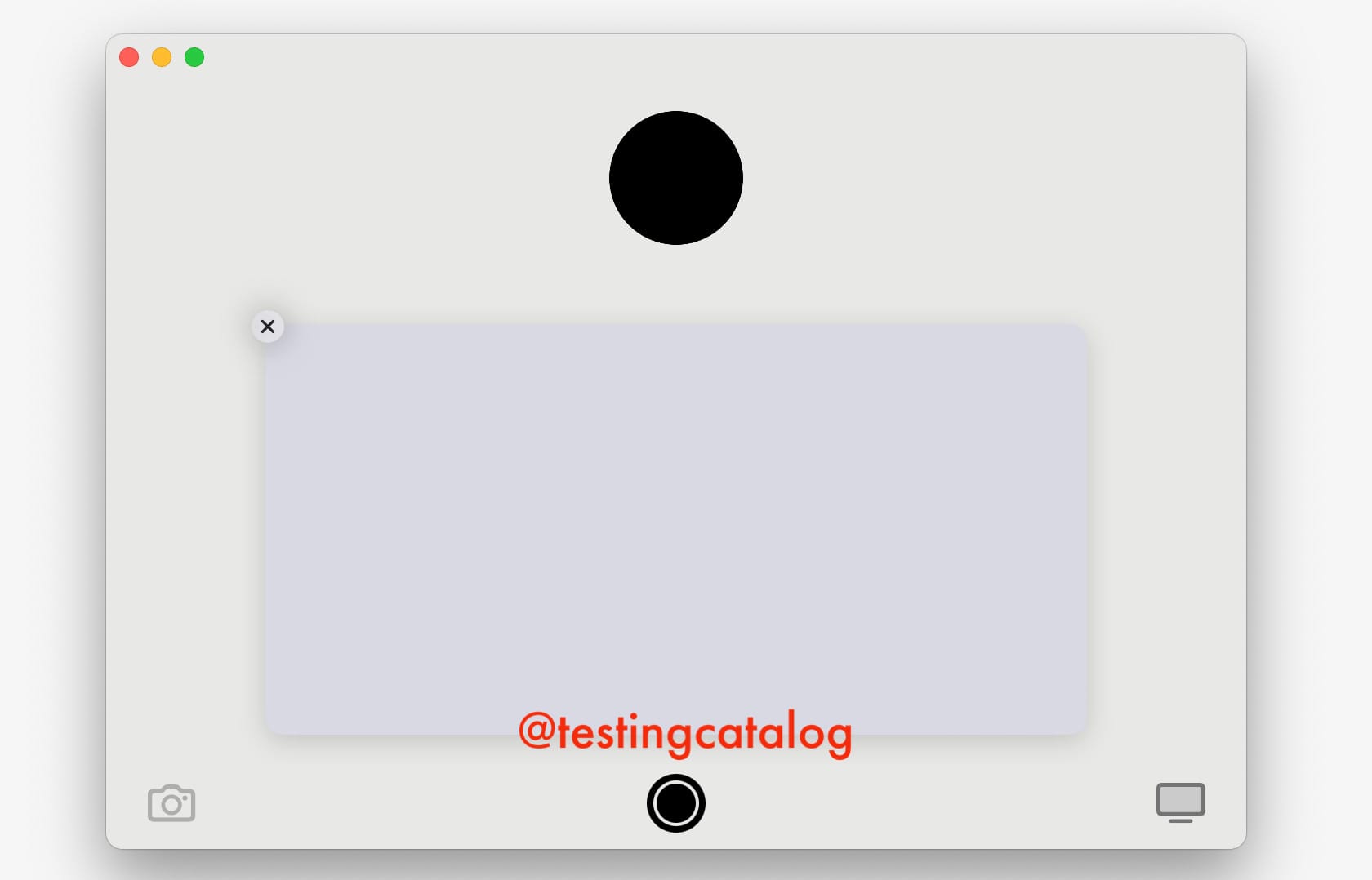

One minor but important change is that enlarged image previews now work properly, fixing a significant issue ahead of the public release. However, the GPT store still has a problem: opening a GPT from the store results in a small, non-draggable pop-up.

It turns out that the ChatGPT desktop app has a working image preview in its latest build. However, it only works 1 out of 20 times 🤤 pic.twitter.com/moJ422IhqK

— TestingCatalog News 🗞 (@testingcatalog) June 12, 2024

In the new voice UI's camera mode on the desktop, a new button lets users take photos. Previously, ChatGPT only had access to the live camera view. This brings the desktop app in line with the iOS app and is another sign that users might get the new UI without full access to its multimodal ("omni") capabilities.

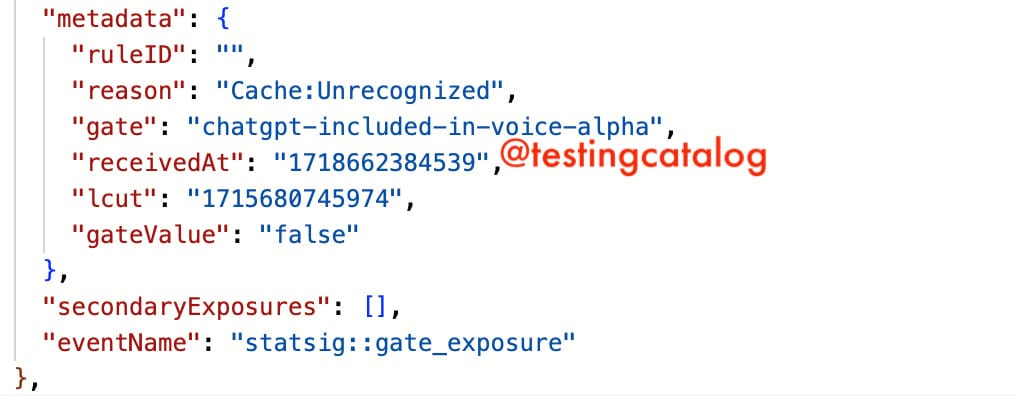

Regarding alpha testing, there are signs it might start soon, but it will likely be limited to a small group, possibly with regional restrictions. Given recent outages, the rollout may take longer than expected.

The start of alpha testing doesn't guarantee immediate access; it will probably begin as internal testing on production systems. Afterwards, it might expand to friends and family and to contractors who help with testing under NDA.

In case you are wondering how the new voice UI will look on macOS 👇

BREAKING: The MacOS 2.0 ChatGPT app upgrade is NOT a "Nothingburger" as it seems at first glance. Check this new UI 👇

— TestingCatalog News 🗞 (@testingcatalog) June 10, 2024

It already comes with a properly working conversational UI you all have seen on the OpenAI demo.

- It will very likely always appear in a separate window… pic.twitter.com/ZHBGQAmlmn

Is an announcement possible by the end of this week? Yes, but it’s still unlikely we’ll get immediate access to this new feature.