Anthropic has introduced new features in its developer Console that streamline creating, testing, and evaluating prompts. Here are the key details:

Prompt Generation:

- The Console now includes a built-in prompt generator powered by Claude 3.5 Sonnet.

- Users can describe a task (e.g. "Triage inbound customer support requests") and Claude will generate a high-quality prompt template.

- The generated prompts use handlebars notation for variables, with each variable name wrapped in double curly braces.
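As an illustration of the variable notation, here is a minimal Python sketch of how a handlebars-style template can be filled in locally. It is not the Console's implementation. It covers only plain `{{name}}` substitution, with no Handlebars helpers, conditionals, or escaping. The template text and the `customer_message` variable are hypothetical and were not produced by the Console's generator.

```python
import re

# Matches handlebars-style placeholders such as {{customer_message}}.
# Whitespace inside the braces is tolerated; names are word characters.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def find_variables(template: str) -> list[str]:
    """Return placeholder names in order of first appearance, without duplicates."""
    seen: list[str] = []
    for name in PLACEHOLDER.findall(template):
        if name not in seen:
            seen.append(name)
    return seen


def render(template: str, values: dict[str, str]) -> str:
    """Replace every {{name}} with values[name]; raise KeyError if a value is missing."""
    missing = [n for n in find_variables(template) if n not in values]
    if missing:
        raise KeyError(f"missing values for: {', '.join(missing)}")
    return PLACEHOLDER.sub(lambda m: values[m.group(1)], template)


# Hypothetical template for the support-triage example.
template = (
    "You are a support triage assistant.\n"
    "Classify this request:\n<request>{{customer_message}}</request>"
)
print(find_variables(template))  # ['customer_message']
print(render(template, {"customer_message": "My invoice is wrong."}))
```

Listing the variables before rendering makes it easy to confirm that every test case supplies a value for each placeholder.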

Test Case Generation:

- Users can automatically generate input variables for prompts, such as example customer support messages.

- Test cases can be manually added, imported from CSV, or auto-generated by Claude.

- The generation logic is customizable and adapts to existing test sets.
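When test cases are imported from CSV, a natural layout is one column per template variable and one row per test case. The sketch below assumes that layout; the Console's actual import format may differ. It uses only Python's standard `csv` module, and the column name and messages are hypothetical.

```python
import csv
import io

# Hypothetical CSV: one column per template variable, one row per test case.
CSV_TEXT = """customer_message
My invoice is wrong.
I can't log in to my account.
How do I cancel my subscription?
"""


def load_test_cases(csv_file) -> list[dict[str, str]]:
    """Read each CSV row into a dict keyed by column header."""
    return [dict(row) for row in csv.DictReader(csv_file)]


def check_columns(cases: list[dict[str, str]], variables: list[str]) -> None:
    """Raise ValueError if any test case lacks a column for a template variable."""
    for i, case in enumerate(cases):
        missing = [v for v in variables if v not in case]
        if missing:
            raise ValueError(f"row {i}: missing {missing}")


cases = load_test_cases(io.StringIO(CSV_TEXT))
check_columns(cases, ["customer_message"])
print(len(cases))  # 3
```

In practice you would pass an open file handle instead of `io.StringIO`. The column check catches a mismatch between the CSV headers and the prompt's variables before any test case runs.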

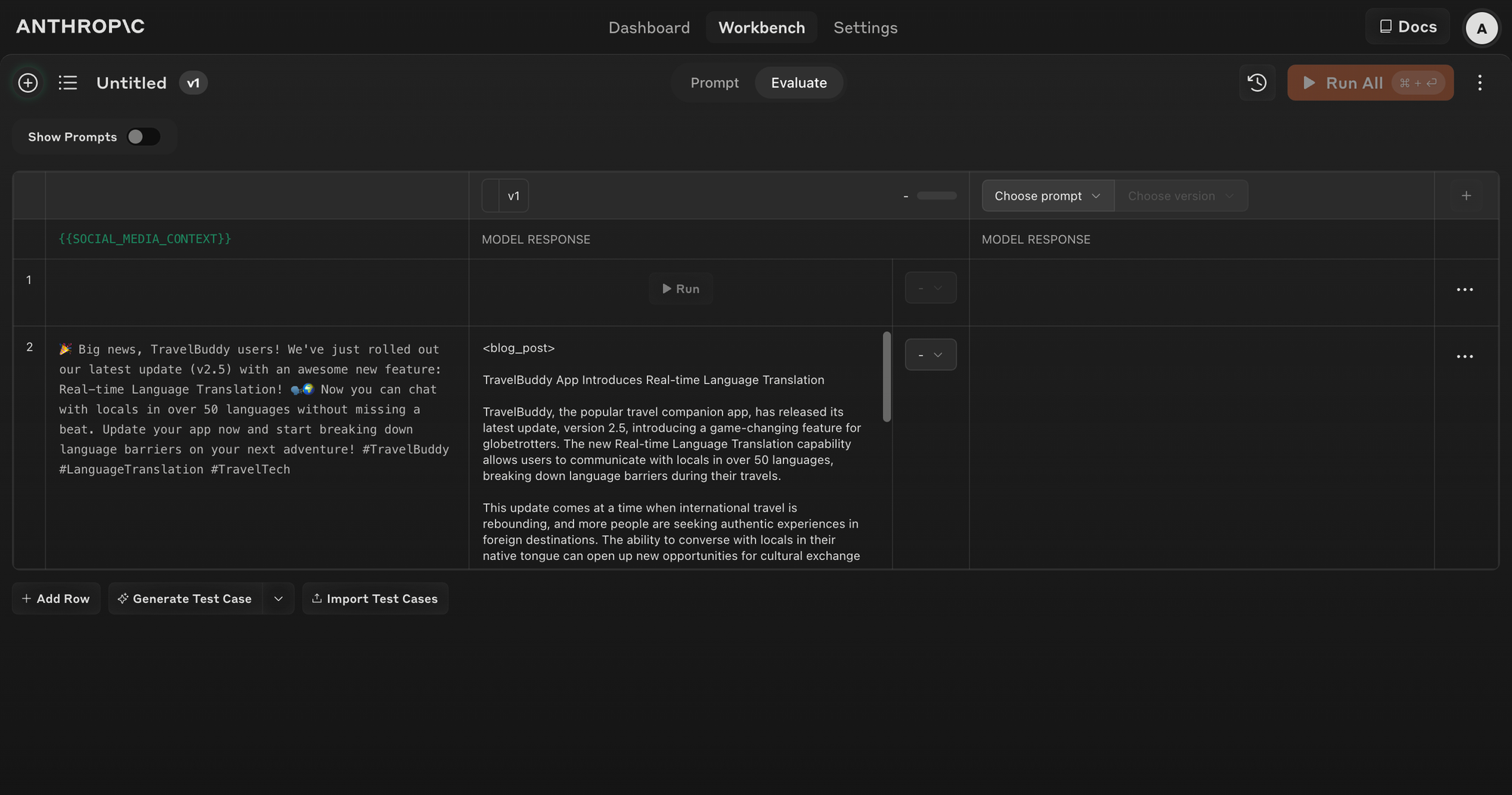

Prompt Evaluation:

- A new "Evaluate" feature allows testing prompts against multiple inputs directly in the Console.

- Users can run all test cases with a single click to see how prompts perform across scenarios.

- Prompts can be iterated on by creating new versions and re-running the test suite.
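The evaluate-and-iterate loop amounts to rendering each prompt version against the same test cases and collecting the outputs. The Python sketch below shows that loop. `fake_model` is a hypothetical offline stand-in for a real model call and does not use any Anthropic API. The prompt versions and the `msg` variable are also made up for illustration.

```python
from typing import Callable


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a model call: echoes the prompt's last line."""
    return "response to: " + prompt.splitlines()[-1]


def run_suite(
    template: str,
    cases: list[dict[str, str]],
    model: Callable[[str], str],
) -> list[str]:
    """Render the template for each test case (exact {{name}} matches) and collect outputs."""
    outputs = []
    for case in cases:
        prompt = template
        for name, value in case.items():
            prompt = prompt.replace("{{" + name + "}}", value)
        outputs.append(model(prompt))
    return outputs


cases = [
    {"msg": "My invoice is wrong."},
    {"msg": "I can't log in."},
]
# Two hypothetical versions of the same prompt.
versions = {
    "v1": "Classify this request:\n{{msg}}",
    "v2": "Classify this request as billing, account, or other:\n{{msg}}",
}
results = {name: run_suite(t, cases, fake_model) for name, t in versions.items()}

# One row per test case, with each version's output side by side.
for i, case in enumerate(cases):
    print(case["msg"], "|", " | ".join(results[v][i] for v in versions))
```

Running every version against an identical, fixed set of cases is what makes the outputs comparable. If the cases changed between runs, a difference in output could come from the input rather than the prompt edit.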

Output Comparison:

- Side-by-side comparison of outputs from two or more prompts is now possible.

- This helps users understand how changes to prompts affect the results.

Quality Grading:

- Subject matter experts can rate response quality on a 5-point scale.

- This helps determine if changes have improved response quality.
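Once outputs have been graded on a 5-point scale, comparing two prompt versions reduces to summarizing the grades each version received on the same test cases. The Python sketch below assumes the grades have been exported as plain lists. The numbers are hypothetical, not Console data. The sketch reports per-case improvements and regressions alongside the means because an average can hide a case that got worse.

```python
from statistics import mean

# Hypothetical grades (1 = poor, 5 = excellent) for two prompt versions,
# graded on the same test cases in the same order.
grades = {
    "v1": [3, 2, 4, 3, 3],
    "v2": [4, 4, 5, 3, 4],
}


def validate(scores: list[int]) -> None:
    """Reject any grade outside the 1-5 scale."""
    bad = [s for s in scores if not 1 <= s <= 5]
    if bad:
        raise ValueError(f"grades outside 1-5: {bad}")


def summarize(old: list[int], new: list[int]) -> dict[str, float]:
    """Compare two versions graded on the same test cases."""
    validate(old)
    validate(new)
    if len(old) != len(new):
        raise ValueError("both versions must be graded on the same test cases")
    return {
        "mean_old": float(mean(old)),
        "mean_new": float(mean(new)),
        "improved": sum(n > o for o, n in zip(old, new)),
        "regressed": sum(n < o for o, n in zip(old, new)),
    }


print(summarize(grades["v1"], grades["v2"]))
```

With only a handful of test cases, a higher mean suggests an improvement but does not prove one. The `regressed` count shows which cases deserve a second look.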

Anthropic announced the update on X (@AnthropicAI) on July 9, 2024: "We've added new features to the Anthropic Console. Claude can generate prompts, create test variables, and show you the outputs of prompts side by side."

These features aim to make prompt engineering more accessible and efficient, especially for those new to the process. The prompt generator and evaluation tools can help users create more effective prompts and improve AI model outputs more quickly.

Anthropic highlights that while generated prompts may not always be perfect, they often outperform hand-written prompts from novice users.

These new features are available to all users on the Anthropic Console, with documentation and tutorials provided on their website.