Anthropic appears to be preparing a major upgrade to its Claude 3.7 Sonnet model that would expand the context window from 200K tokens to 500K tokens. Evidence of the feature has surfaced in newly added feature flags, suggesting that a rollout may be imminent. A larger context window would let users input and process much larger datasets or codebases in a single session, potentially transforming workflows in enterprise applications and coding environments.

BREAKING 🚨: Anthropic is preparing to release a new version of Claude Sonnet 3.7 with a 500K Context Window (Current is 200K). pic.twitter.com/LUdFmllcne

— TestingCatalog News 🗞 (@testingcatalog) March 26, 2025

The leaked features point to a substantial leap in Claude's capabilities. A 500K-token context window would allow the model to process large amounts of information directly, without relying on retrieval-augmented generation (RAG), which passes the model only selected chunks and can lose surrounding context or the original ordering. This could prove valuable for tasks like analyzing large political document collections, aggregating summaries, or working with complex codebases spanning tens of thousands of lines of code. However, questions remain about how effectively the model can use such a large context, since recall often degrades over very long inputs, and larger windows also increase memory requirements and computational costs.
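To put the jump from 200K to 500K tokens in concrete terms, here is a rough back-of-the-envelope sketch that converts a token budget into an approximate number of lines of code. It assumes about 4 characters per token and an average code line of about 40 characters. Both figures are illustrative heuristics, not Anthropic's tokenizer, and real ratios vary with the programming language and formatting. The function name `approx_lines_that_fit` is hypothetical.

```python
# Rough capacity estimate for a context window.
# ASSUMPTIONS (illustrative heuristics, not Anthropic's actual tokenizer):
#   ~4 characters per token and ~40 characters per line of code.
CHARS_PER_TOKEN = 4
AVG_CHARS_PER_LINE = 40


def approx_lines_that_fit(context_tokens: int, reserved_for_output: int = 0) -> int:
    """Estimate how many lines of code fit in a context window (hypothetical helper)."""
    # Tokens left over after reserving room for the model's reply.
    usable_tokens = max(context_tokens - reserved_for_output, 0)
    # Under the assumptions above, one line costs about 10 tokens.
    tokens_per_line = AVG_CHARS_PER_LINE / CHARS_PER_TOKEN
    return int(usable_tokens // tokens_per_line)


if __name__ == "__main__":
    for window in (200_000, 500_000):
        print(f"{window:>7,} tokens ≈ {approx_lines_that_fit(window):,} lines")
```

Under these assumptions, 200K tokens come to roughly 20,000 lines and 500K tokens to roughly 50,000 lines, before reserving any space for instructions or the model's output.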

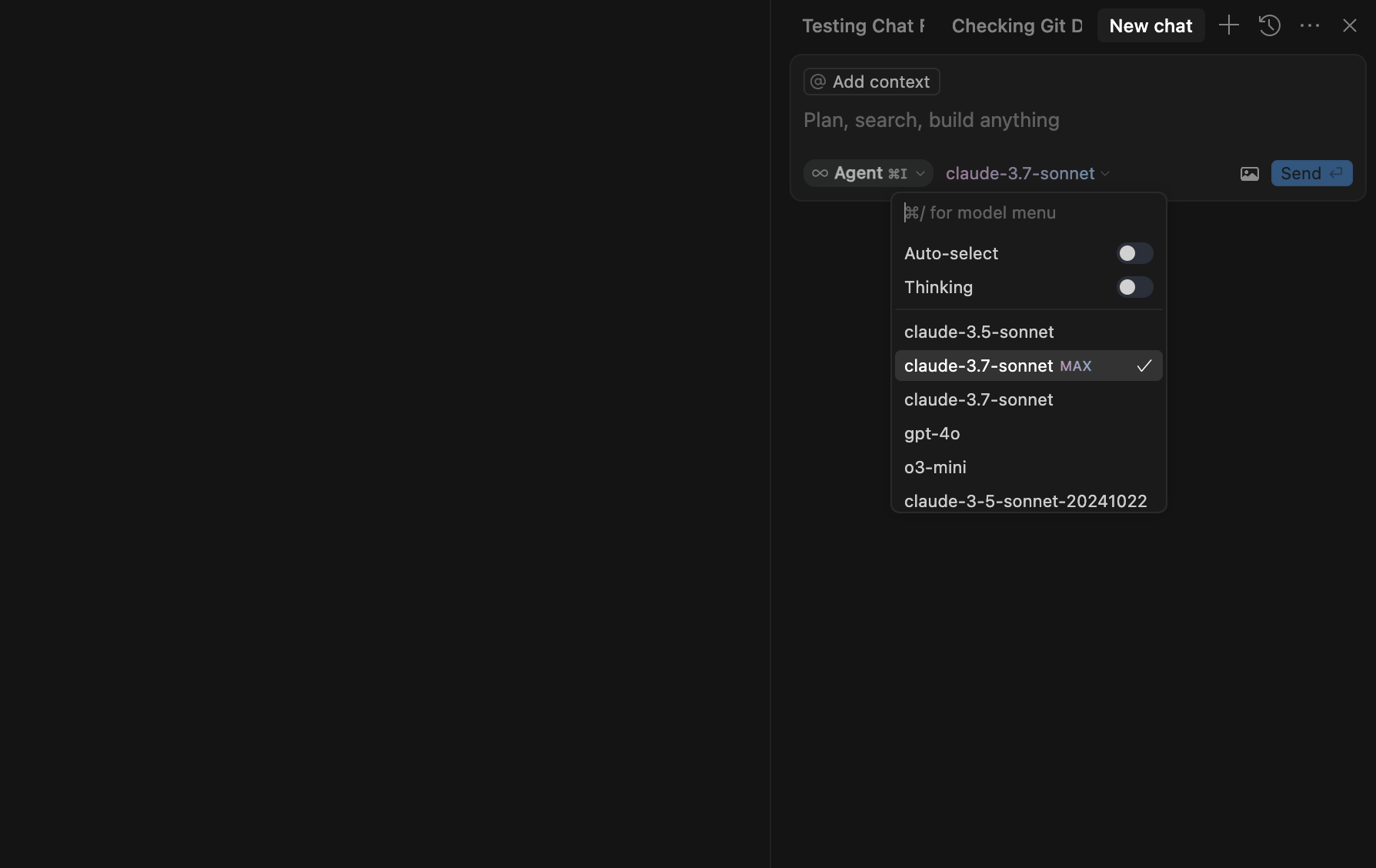

It is not yet clear whether this will be limited to Enterprise customers, and some reports suggest the larger window is already available through select partners. For example, Cursor recently unveiled a "Claude Sonnet 3.7 MAX" option in its IDE 👀

Anthropic has positioned itself as a leader in AI safety and practical applications. Claude 3.7 Sonnet is already optimized for real-world use cases, including coding and reasoning tasks. This upgrade fits Anthropic's focus on enterprise solutions and its strategy of competing with models that offer extensive context windows, such as Google's Gemini series.

The timing of this development coincides with the rise of "vibe coding," a term coined by Andrej Karpathy earlier this year. Vibe coding relies on AI to generate code from natural language descriptions rather than manual programming. An expanded context window could further empower this approach by letting developers work on larger projects without losing context or restarting sessions when they hit token limits. This shift could democratize coding, making it accessible to non-programmers while accelerating workflows for professionals.

The exact release date for the 500K-token version of Claude 3.7 Sonnet is still unclear, but Anthropic has historically introduced new features through enterprise plans before making them broadly available. If rolled out widely, this upgrade could redefine the capabilities of large language models in coding, research, and data analysis across industries.